1. Summary

Like those of most institutions, university storage requirements are always increasing.

Fortunately, storage hardware prices are dropping rapidly; raw SATA disk storage currently costs about 25-30 cents/GB. Multi-core motherboards with multiple Ethernet interfaces are now commonplace, and full high-performance (albeit generic) storage systems can be had for less than $1000/TB. We refer to these devices as Storage Bricks.
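For scale, the disk portion of a Brick can be estimated from the per-GB price above. This is a minimal sketch, assuming the 16 x 500GB configuration described in Section 2.1 and decimal units (1 TB = 1000 GB, as disk vendors count); it covers only the disks, not the chassis, controller, or RAM.

```python
# Raw disk cost for a Storage Brick at the per-GB prices quoted above.
# Assumption: decimal units (1 TB = 1000 GB), as disk vendors use.

DISKS = 16      # hot-swap slots in the test Brick (Section 2.1)
DISK_GB = 500   # Seagate 500GB disks used in the test


def raw_disk_cost(n_disks: int, disk_gb: int, dollars_per_gb: float) -> float:
    """Cost in dollars of the disks alone (no chassis, controller, or RAM)."""
    return n_disks * disk_gb * dollars_per_gb


if __name__ == "__main__":
    for price in (0.25, 0.30):
        cost = raw_disk_cost(DISKS, DISK_GB, price)
        print(f"{DISKS} x {DISK_GB}GB at ${price:.2f}/GB: ${cost:,.0f}")
```

At 25-30 cents/GB this comes to roughly $2,000 to $2,400 for the disks.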

In contrast, prices for proprietary, integrated, highly reliable storage systems are still quite high, approximately 4-8x the cost of the generic Storage Brick. The differences between such generic Storage Bricks and the products offered by high-end commercial storage vendors such as Network Appliance, EMC, or BlueArc lie both in proprietary hardware, such as bus structures and hardware accelerators, and in software, such as proprietary advanced Logical Volume Managers. Such software adds specialized features like snapshotting, volume migration, on-the-fly data rearrangement, RAID idling, special-purpose error checking, and more. There is also something of a lifestyle choice in choosing one of the proprietary vendors: you will have so much invested in that particular proprietary path that it will be difficult to change. Doing so would be not unlike a divorce.

However, much of the storage that a research university requires maps well to lower-end hardware, especially if smaller departments or even labs are administratively responsible for their own storage. As such, there is little advantage in paying the high base costs of the proprietary servers mentioned above. Therefore, I set out to see how much performance and reliability could be bought for the least money.

An 8-port and a 16-port hardware PCI-e RAID controller from each of Areca and 3ware were tested under a variety of configurations and applications in order to evaluate what a relatively cheap platform could provide in terms of performance and reliability for the money. The Areca controllers were the 8-port ARC-1220 and the 16-port ARC-1261ML. The 3ware controllers were the 8-port and 16-port models of the 9650SE.

Besides the disk controllers, the variables tested included: RAID type, number of disks in the RAID, 4 popular Linux filesystems (Ext3, Reiserfs, JFS, and XFS), filesystem initialization, amount of RAM on the card, amount of system RAM, and readahead size. These were tested using 4 application benchmarks: the Bonnie++ and IOZONE disk benchmarking suites, the netCDF operator utility ncecat, and a compile of the Linux 2.6.21.3 kernel.

All the cards performed very well: bandwidth on large writes to a 16-disk RAID6 array was measured at >2GB/s on a large-memory system and up to ~800MB/s on a RAM-constrained system. Large reads were slower, reflecting the ability of writes to be partially cached, but were still measured at up to 570MB/s on a 16-disk RAID6. Small reads and writes were significantly slower (~60MB/s), reflecting the seek overhead of complicated read/write patterns. Not surprisingly, the 16-port cards were both more cost-effective and higher-performing. There was no clear winner between 3ware and Areca; each had areas of slightly better performance.

The filesystems all performed well, but JFS and XFS performed best on large disk IO and did very well in the other tests as well. JFS and XFS both initialized even 7.5TB filesystems essentially instantaneously. Reiserfs was best under a variety of conditions on small-file, I/O-intensive operations, as represented by the kernel compile test. The Ext3 filesystem uses the same on-disk structure as the non-journaling ext2 filesystem, and thus requires initialization time proportional to the RAID size: ~17 minutes for a 3.5TB RAID5 filesystem. It also performed worst on large data reads and writes, and so would be a poor choice for a data volume.

The variable that contributed most to better performance was the amount of system RAM. The more motherboard RAM your storage device has, the faster it will perform in almost all circumstances.

2. Introduction & Rationale

Digital information storage is increasingly important at all institutions; universities are no exception. Experimental equipment generates increasing amounts of digital data, social sciences increasingly rely on digital archives, and many researchers in all fields are analyzing large digital archives as primary data sources. Academic research is therefore at the forefront in requiring more storage and better ways of dealing with it. Remote sensing streams, gene expression data, medical imaging, and simulation intermediates now easily range into the 10s, 100s, and often 1000s of GBs. In addition, class work, lab notes, administrative documents, and generic digital multimedia contribute to the digital flood. Email is a particular concern, as many people use it as their primary work log; keeping it available over long periods of time is therefore essential for tracking research development, primacy, and intellectual property.

Some of this data is reproducible at low cost; some is "once in a lifetime". Other data is extremely valuable, either because of the cost of (re)producing it or because it deals with sensitive financial or medical records. This proposal does not address the storage of legally binding documents of the highest sensitivity and security. There are commercial vendors who supply such technologies, and they are typically 4-8X more expensive than the storage that we address. For example, the Network Appliance FAS270 is a comparably sized storage device that costs in the low $40K range, compared to just under $10K for this device. We address the storage in the pretty spot of this terrain: pretty cheap, pretty secure, pretty available, pretty fast, pretty accessible, pretty flexible. The plan is to use these devices as building blocks of a larger infrastructure, and because it is also a fairly accurate industry term, we are calling the device described here a BRICK.

Not only is data size increasing, but people are communicating this data to their colleagues at increasing rates. Typically this is done via email attachments, but there is some evidence that researchers are using URLs to pass pointers to data rather than the data itself. NACS is charged with seeing that this is done securely, easily, and quickly, with generous bandwidth and storage allocations. One way to do this is to match storage demands from schools with local bricks that are still maintained by NACS. They could be co-located in remote server closets and managed by NACS, the local administrators, or a combination of the two.

2.1. The Storage Brick

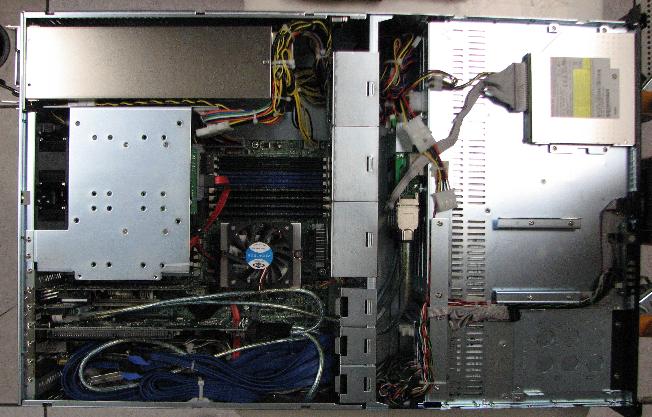

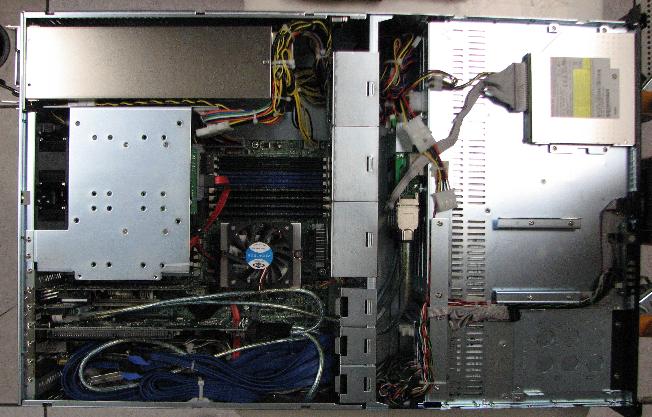

The basic unit of this test is the Storage Brick (pictured), described here. The purchased test version is smaller than those described: a rack-mountable 3U chassis containing a motherboard, redundant power supplies, multiple Ethernet interfaces, 8GB RAM, 4 Opteron cores, and 16 hot-swap SATAII slots. These slots allow disks to be pulled from a running system for replacement. The system does not need to be brought down, and the data remains accessible during the replacement because of the parity data striped across the other disks (in RAID5 or RAID6). While these slots could be populated by disks of any capacity up to 1TB each (the largest disk commercially available now), in this configuration we used Seagate 500GB disks, the most cost-effective disks when I specified the system. This configuration provides 8TB of raw disk or RAID0 (striped data), 7.5TB of RAID5 (1 parity disk), 7TB of RAID6 (2 parity disks), or 4TB of RAID10 (striped and mirrored data).
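The capacities above follow from how many disks' worth of space each RAID level gives up to redundancy. This is a minimal sketch, assuming identical disks, textbook layouts, no hot spares, and ignoring filesystem overhead.

```python
# Usable capacity of common RAID levels for n identical disks.
# Assumptions: textbook layouts, no hot spares, decimal TB, no FS overhead.


def usable_tb(level: str, n_disks: int, disk_tb: float) -> float:
    """Return usable capacity in TB for the given RAID level."""
    if level == "RAID0":          # striping only, no redundancy
        data_disks = n_disks
    elif level == "RAID5":        # one disk's worth of parity
        data_disks = n_disks - 1
    elif level == "RAID6":        # two disks' worth of parity
        data_disks = n_disks - 2
    elif level == "RAID10":       # every block is mirrored
        if n_disks % 2:
            raise ValueError("RAID10 needs an even number of disks")
        data_disks = n_disks // 2
    else:
        raise ValueError(f"unknown RAID level: {level}")
    return data_disks * disk_tb


if __name__ == "__main__":
    for level in ("RAID0", "RAID5", "RAID6", "RAID10"):
        print(level, usable_tb(level, 16, 0.5), "TB")
```

Under these assumptions, 16 x 0.5TB disks give 8TB (RAID0), 7.5TB (RAID5), 7TB (RAID6), and 4TB (RAID10).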

The three redundant power supplies allow 1 of the 3 to fail without the system becoming inoperable. Power supplies are the second most likely point of failure on a system.

Table: The Storage Brick Device

| Description |
|---|
| Front view of Storage Brick, sled out |
| Top view of hot-swap disk sled |
| Top view of Storage Brick |
| Back view of Storage Brick |

3. Primary Variables Tested

3.1. The Disk Controllers

For data to be written to and retrieved from a storage device, the disks need to be coordinated by a disk controller, which presents the storage available on the disks to the operating system. There is a huge variety of controllers available, but for this test I chose true hardware RAID controllers rather than the dumb, cheap controllers (aka fake RAID controllers) that are typically used in desktop machines. Such controllers use the main CPU to do the computations for placing the data on the disk, and for most situations this is fine, as the CPU of a desktop machine is usually idle. On a server machine this is often not the case, so efficiency is more crucial, especially when the disk controller is responsible for many disks and also has to perform the parity calculations to spread the data across the disk array, as is the case with RAID5 and RAID6.
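For RAID5, the parity calculation that a hardware controller offloads from the CPU is a bytewise XOR across the data blocks of a stripe. The sketch below is a simplified illustration (one stripe, fixed parity position, tiny blocks), not how either vendor's firmware actually lays out data. It shows both the parity computation and how a missing block is rebuilt from the survivors, which is what keeps data readable while a failed disk is swapped out. RAID6 adds a second, differently computed syndrome (not shown) so that any two disks can be lost.

```python
from functools import reduce


def xor_blocks(blocks):
    """Bytewise XOR of equal-length byte blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return the data blocks plus one parity block (simplified RAID5 stripe)."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, missing_index):
    """Reconstruct the block at missing_index by XOR-ing all the others."""
    survivors = [blk for i, blk in enumerate(stripe) if i != missing_index]
    return xor_blocks(survivors)


if __name__ == "__main__":
    stripe = make_stripe([b"disk", b"data", b"here"])
    print(rebuild(stripe, 1))  # prints b'data'
```

Because XOR is its own inverse, the same routine rebuilds a lost data block or a lost parity block.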

Table: Hardware RAID controllers Used

| Description |
|---|
| 3ware 9650SE 8-port multilane PCI-e controller & battery |
| 3ware 9650SE 16-port multilane PCI-e controller w/o battery |
| Areca 8-port PCI-e controller & battery |
| Areca 16-port multilane PCI-e controller & battery |

An 8-port and a 16-port hardware PCI-e RAID controller from each of Areca (a recent Taiwanese manufacturer of high-performance controllers) and 3ware (an older American manufacturer of RAID controllers, which has contributed patches and code to the Linux kernel for several years) were tested under 64-bit Linux kernel 2.6.20-15 (Ubuntu Feisty). The 3ware controllers and the 16-port Areca controller were multilane, using Infiniband-like connectors that integrate 4 SATAII cables into 1 for easier cabling and more secure connections.

Both Areca and 3ware provide battery backup of the RAM cache, so that if power fails before the data can be synced to the disks, it will remain in the card cache until power is restored. Note that the battery for the 3ware controllers is integrated onto the card, while the battery for the Areca requires a separate slot. Only the Areca 16-port card allowed an upgrade of the on-card RAM, to a maximum of 2GB. The rest supplied 256MB of soldered cache RAM.

The Areca device drivers are just now being incorporated into the mainline kernel (2.6.19 and later) and are being patched into previous kernels by almost all Linux distribution vendors. 3ware has some advantage because, unlike Areca, its controllers are supported by the SMART monitor daemon (smartd), which can peek into the SMART data of the individual disks behind the controller to check temperature, recorded errors, etc. The ability to detect SMART errors from the individual disks is an advantage in trying to see whether a disk is about to fail before the fact. Two analyses of large numbers of disk failures were recently published (one from Google, the other from CMU) that tried to evaluate predictive failure of disks. They found that detecting SMART errors has been useful in predicting disk failure, although far from absolute. A good review of both studies is Rik Farrow's editorial in Usenix.
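With smartmontools, disks behind a 3ware 9000-series controller are addressed with smartctl's `-d 3ware,N` device type, where N is the port number, through the controller's character device. The sketch below builds one such command per port. It assumes the card appears as `/dev/twa0` (the usual node for the first 9650SE) and a 16-port card; running the commands needs root and an installed smartctl.

```python
import shlex


def smartctl_commands(n_ports: int, controller_dev: str = "/dev/twa0"):
    """Build one 'smartctl -a' command per port on a 3ware 9000-series card.

    Assumption: the card is exposed as controller_dev (/dev/twa0 for the
    first 9650SE on a typical Linux system).
    """
    return [["smartctl", "-a", "-d", f"3ware,{port}", controller_dev]
            for port in range(n_ports)]


if __name__ == "__main__":
    for cmd in smartctl_commands(16):
        print(shlex.join(cmd))
```

Each command list could be passed to `subprocess.run`; for continuous monitoring, smartd.conf accepts the same device syntax (for example `/dev/twa0 -d 3ware,0 -a`).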

3.2. The RAID type

I tested RAID0 (striping data over multiple disks for increased performance without redundancy), RAID10 (RAID0 with mirroring), RAID5 (striping data over multiple disks with 1 parity disk), and RAID6 (like RAID5 but with 2 parity disks).

3.3. Number of disks in the RAID

I tested how much performance was gained by increasing the number of disks in an array for RAID0, RAID5, and RAID6.

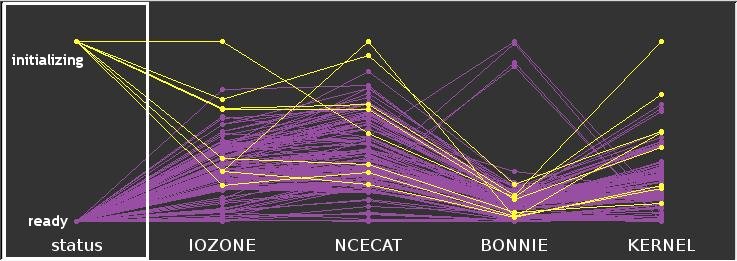

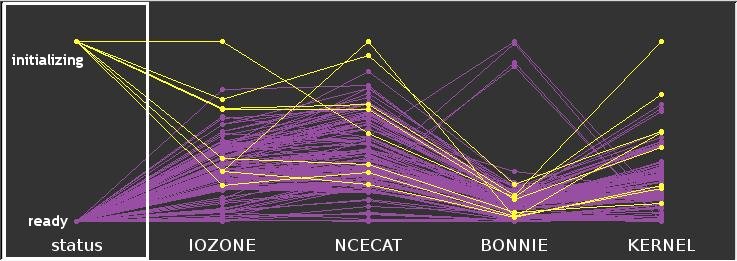

3.4. Initialization performance

I tested how much performance was affected by running the tests while the RAID5 and RAID6 arrays were being initialized, as well as after initialization had finished. Initializing a large RAID can take hours if not days. This tested the performance of an array while it was being initialized by the controller.

3.5. The amount of Controller memory

Areca provided a 2GB DIMM to replace the 256MB DIMM that the controller uses as a cache.

3.6. The amount of System memory

I tested under conditions of 0.5 GB of RAM and the full 8GB of RAM. Since Linux intelligently caches file input and output, increasing the amount of RAM can have a dramatic effect on disk IO.

3.7. File systems

While the controller is responsible for making the raw disk storage available to the Operating System, another level of organization is required before the data can be used: the raw disk storage must be organized into a file system. This is the structure that allows directories to be made and data to be stored as files, which often have to span raw device sectors. It allows the dates of file creation, modification, access, etc. to be associated with a file, and allows files to be renamed, copied, moved, etc. Depending on what the storage is going to be used for, the type of file system can have a large effect on the overall performance of the entire system.

I tested 4 of the most popular Linux filesystems:

- Ext3 - the journaling version of the long-in-the-tooth but very reliable ext2 filesystem. Because it is the ext2 filesystem on disk with journaling added on top, whereas the others are native journaling filesystems, it takes a very long time to initialize: 3 orders of magnitude slower than XFS on the 3.5 TB filesystem.
- JFS - the 64-bit, IBM-contributed journaling filesystem originally developed for AIX, which supports extents.
- Reiserfs - version 3 of Hans Reiser's file system, which has some unusual features like tail-packing (storing the ends of multiple files in the same block to increase storage efficiency with small files).
- XFS - SGI's journaling filesystem, derived from their long experience with high-performance, large-data supercomputing applications. XFS was designed from the start to use extents.

3.8. Readahead size

Readahead is the amount of data pre-read from the disk into memory, on the theory that if an application wants some data from a file, it probably wants more of it. A useful document from 3ware implicated readahead in improving disk performance, so it was tested in doubling strides from 256B to 32KB.
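On Linux, the readahead of a block device is usually read and set with `blockdev --getra` and `blockdev --setra`, which count in 512-byte sectors rather than bytes (`/sys/block/<dev>/queue/read_ahead_kb` exposes the same setting in KiB). The report does not say which interface was used, so this sketch only shows the unit conversion, assuming a value such as 256 is a `blockdev` sector count; the device name `/dev/sdb` is hypothetical.

```python
SECTOR_BYTES = 512  # blockdev --setra/--getra count 512-byte sectors


def setra_to_kib(sectors: int) -> float:
    """Convert a blockdev readahead value (in sectors) to KiB."""
    return sectors * SECTOR_BYTES / 1024


def setra_command(sectors: int, device: str = "/dev/sdb"):
    """Build a 'blockdev --setra' command; /dev/sdb is a hypothetical device."""
    return ["blockdev", "--setra", str(sectors), device]


if __name__ == "__main__":
    value = 256
    while value <= 32768:  # doubling strides, as in the test plan
        print(value, "sectors =", setra_to_kib(value), "KiB ->",
              " ".join(setra_command(value)))
        value *= 2
```

If the values in this report are sector counts, the common Linux default of 256 corresponds to 128 KiB, and an 8K (8192) setting to 4 MiB.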

3.9. Application testing

I used both well-known benchmarks and real-life applications:

- bonnie++ (http://www.coker.com.au/bonnie++/) is a benchmark suite aimed at performing a number of simple tests of hard drive and file system performance. It is very simple to use.
- IOZONE is a fairly comprehensive disk benchmark suite that generates and measures a wide array of IO tests, including: read, write, re-read, re-write, read backwards, read strided, fread, fwrite, random read, pread, mmap, aio_read, and aio_write.
- Linux kernel compile, using parallel make to build the 2.6.21.3 kernel and most of the modules.
- ncecat, a utility from the NCO suite that reads and rearranges netCDF files using GB-sized reads and writes.
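Taken together, the variables in Section 3 define a large test matrix. The sketch below enumerates a hypothetical full-factorial run list with `itertools.product`; the value lists come from the text, but the report does not say that every combination was run, and the controller and disk-count dimensions are left out for brevity.

```python
from itertools import product

FILESYSTEMS = ["ext3", "jfs", "reiserfs", "xfs"]
RAID_LEVELS = ["RAID0", "RAID10", "RAID5", "RAID6"]
READAHEADS = [256 * 2**i for i in range(8)]   # 256 .. 32768, doubling
SYSTEM_RAM_GB = [0.5, 8]
BENCHMARKS = ["bonnie++", "iozone", "kernel-compile", "ncecat"]


def run_list():
    """Yield one dict per (hypothetical) benchmark run."""
    for fs, raid, ra, ram, bench in product(
            FILESYSTEMS, RAID_LEVELS, READAHEADS, SYSTEM_RAM_GB, BENCHMARKS):
        yield {"fs": fs, "raid": raid, "readahead": ra,
               "ram_gb": ram, "benchmark": bench}


if __name__ == "__main__":
    runs = list(run_list())
    print(len(runs), "runs")  # 4 * 4 * 8 * 2 * 4 combinations
```

Even without the controller and disk-count dimensions, a full factorial design here comes to 1024 runs.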

4. Results

4.1. Individual Tests

4.1.1. KERNEL

The kernel compile times differed only slightly across conditions with restricted RAM, but the 8G case was notably faster. Also notable was that with 8G RAM, ext3 did considerably better than in a restricted-RAM environment, and that with more RAM, the RAIDs with more spindles did better. Oddly enough, with restricted RAM, the lower-spindle-count RAIDs did better.

XFS recorded the top time in the restricted-RAM kernel compile test (572s), but ReiserFS took 14 of the top 20 times recorded (under different conditions), while JFS and Ext3 were represented only 2 times. The top 20 times were fairly widely spread, at 661+/-9.7 s with a range of 572-696s.
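Most results in this section are summarized as "top 20" lists: sort all runs by time, keep the fastest N, count which filesystem (or card, or RAID level) produced them, and report a mean with a spread. A minimal sketch of that kind of summary follows; the record format is an assumption, and because the report does not state whether its "+/-" figures are standard deviations or standard errors, both are computed.

```python
from collections import Counter
from math import sqrt
from statistics import mean, stdev


def top_n_summary(records, n=20, key="fs"):
    """Summarize the n fastest runs.

    records: iterable of dicts with a 'seconds' field and a grouping field.
    Returns (count per group, mean, standard deviation, standard error).
    """
    fastest = sorted(records, key=lambda r: r["seconds"])[:n]
    times = [r["seconds"] for r in fastest]
    sd = stdev(times) if len(times) > 1 else 0.0
    return (Counter(r[key] for r in fastest), mean(times), sd,
            sd / sqrt(len(times)))


if __name__ == "__main__":
    # Illustrative values only, not measurements from this report.
    demo = [{"fs": "reiserfs", "seconds": 600}, {"fs": "xfs", "seconds": 590},
            {"fs": "jfs", "seconds": 650}, {"fs": "ext3", "seconds": 700}]
    counts, avg, sd, sem = top_n_summary(demo, n=3)
    print(counts, round(avg, 1), round(sd, 1), round(sem, 1))
```

The standard error shrinks with the square root of N, so for a top-20 list it is about 4.5 times smaller than the standard deviation.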


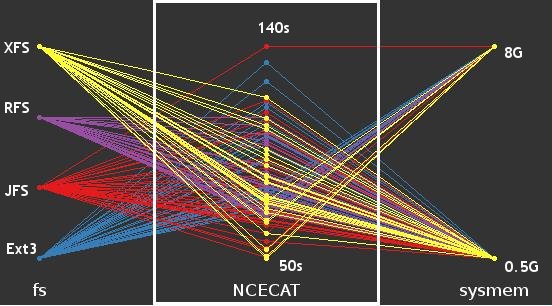

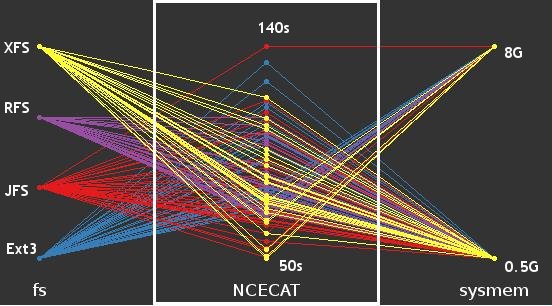

4.1.2. NCECAT

This program, part of the NCO suite, is specialized to read and concatenate large swaths of binary data from netCDF files efficiently. As such, it is no surprise that a large readahead would increase performance. It was something of a surprise that increasing the readahead beyond 8K in many cases did not lead to further increases.

With 0.5GB system RAM, JFS accounted for 11 of the fastest 20 times, followed by ReiserFS with 6, then XFS with 3 and ext3 with 1. While the top 2 times were recorded with the Areca 16-port card on RAID6, the remaining 18 were on RAID0, 15 of them using 3ware cards. With restricted RAM, the average was 70.7+/-1s, with a range of 58-76s.

In the same test with 8GB system RAM, the best time was 50s, with an average of 62.3+/-1.6s and a range of 50-70s. The distribution of filesystems represented changed as well, with XFS taking 6 places, including 3 of the top 5. JFS took 10 of the top 20, and ReiserFS took the last 4 places of the top 20. Also notable was that all of the top 20 places were achieved with readahead values of 8K or larger, whereas with restricted system RAM there was a wider spread of values from very large to very small, although the data skewed large. Areca took the 4 fastest places, but 3ware took 14 of the top 20.

It was surprising that the fastest performance for this test was under RAID6 (on the Areca 16-port card) rather than RAID0, showing that you can have data redundancy as well as high performance. In both high and low system memory tests, an Areca card with RAID6 provided the fastest times with both XFS and JFS.

Contrary to a common belief, increasing the number of spindles from 8 to 16 had little effect on performance under RAID5/6.


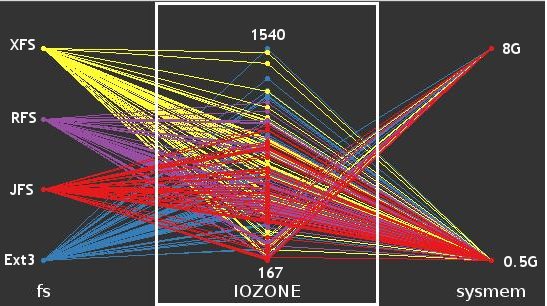

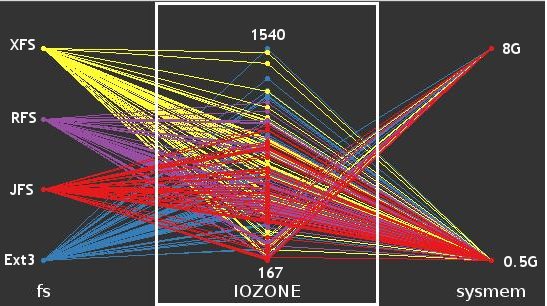

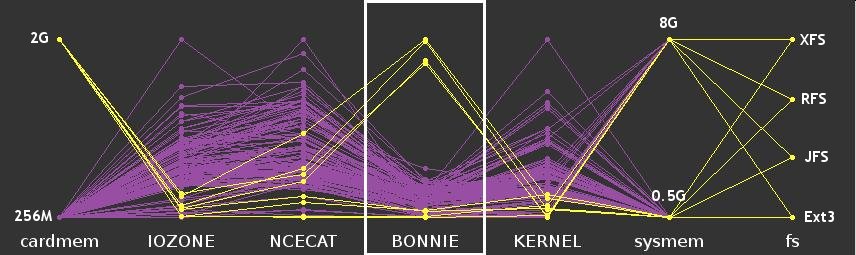

4.1.3. IOZONE

The IOZONE test runs a mix of many kinds of disk access, with variable numbers of threads accessing the disk at once. The time to complete it can therefore be considered a proxy for mixed disk use, although not a good proxy for a particular storage requirement. Because of the complexity of the individual tests, it is difficult to draw a single conclusion from each run, so I've used the run time of the entire test as a crude summary. Once settled on a particular set of parameters, it would help to use IOzone's supplied gnuplot data viewer, Generate_Graphs, to examine all the parameters of interest in interactive 3D. See below for obtaining the raw data.

In the top 20 results for this test, the JFS filesystem appeared 9 times, including all of the top 5 times. XFS was represented 6 times and ReiserFS 5 times. 19 of the 20 best times were obtained using the full 8GB system RAM, and the one time recorded with the lower system RAM was attained with the 2GB DIMM card option. Half of the top times were attained using 8K readahead values. RAID0 was used in 15 of the top times, with RAID6 making up 4 of the rest. In 17 of the top 20 times, the card used was the Areca 16-port card; of the 3 times the 3ware card was used, it was with 8 disks instead of 16.

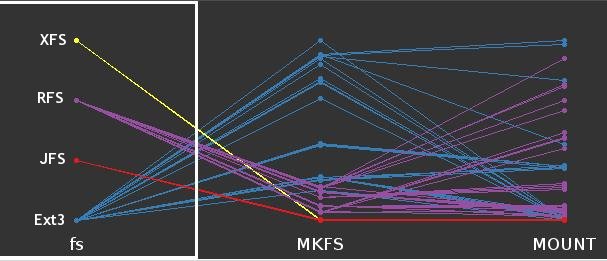

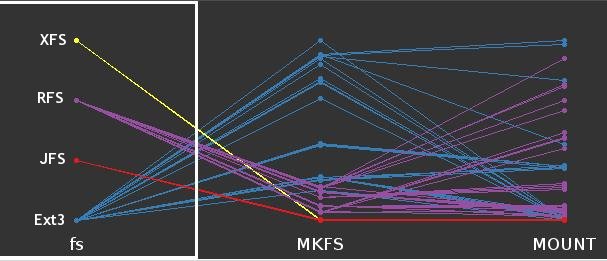

4.1.4. MKFS

While not a critical component for most storage applications, making the filesystem on the disk device distinguishes a modern journaling filesystem from an older one that depends on writing the block and inode information to disk. Of the 4 filesystems tested here, XFS initialized almost instantaneously (see above) regardless of the size of the RAID, followed closely by JFS. On the 3.5TB filesystem, Reiserfs took about 10x as long as JFS, and Ext3 took about 10x as long as Reiserfs.

For XFS and JFS, there was no effective difference across RAID types or numbers of disks. Reiserfs and Ext3 took time proportional to the RAID size (so that RAID0 took ~1/2 the time that RAID5 did for the same number of disks). This mkfs speed would be very useful if you had to bring up a large filesystem quickly to provide emergency storage. It is also reassuring that, among the tested filesystems, the two that initialized most quickly were the ones that usually had the highest performance.
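Timing filesystem creation needs little more than a wall-clock timer around the mkfs call. The sketch below is not the harness used for these tests. The flags are an assumption: they are intended to keep e2fsprogs, jfsutils, reiserfsprogs, and xfsprogs quiet or non-interactive, but prompting behavior varies between versions, so check the local man pages. mkfs destroys whatever is on the target device.

```python
import subprocess
import sys
import time

# Assumed non-interactive mkfs invocations for the four filesystems tested.
MKFS = {
    "ext3": ["mkfs.ext3", "-q"],
    "jfs": ["mkfs.jfs", "-q"],
    "reiserfs": ["mkfs.reiserfs", "-q"],
    "xfs": ["mkfs.xfs", "-f"],
}


def mkfs_command(fs: str, device: str):
    """Build the mkfs command line for fs on device (DESTROYS its data)."""
    return MKFS[fs] + [device]


def time_command(cmd) -> float:
    """Run cmd to completion and return elapsed wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)
    return time.perf_counter() - start


if __name__ == "__main__":
    # Harmless stand-in command; substitute mkfs_command("xfs", "/dev/sdX")
    # only on a scratch array whose contents you can afford to lose.
    print(f"{time_command([sys.executable, '-c', 'pass']):.3f} s")
```

`time.perf_counter` is used because it is monotonic, so the measurement is not disturbed by clock adjustments during a long Ext3 run.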

4.1.5. MOUNT

Mount times are also a vanishingly tiny part of the lifetime of a storage system, but since mounting was part of the test procedure, I'll mention that XFS and JFS mounted instantly, regardless of the size or type of the RAID. Reiserfs and Ext3 took longer, and also took highly variable amounts of time to mount the array: in the worst case, 5-140s to mount the largest 7.5TB array (see above).

4.2. Parameters

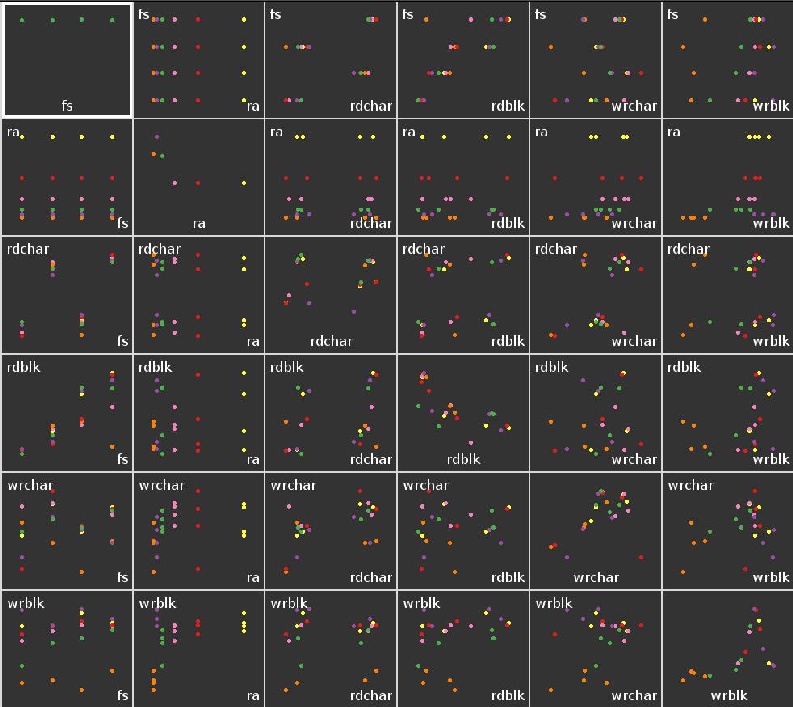

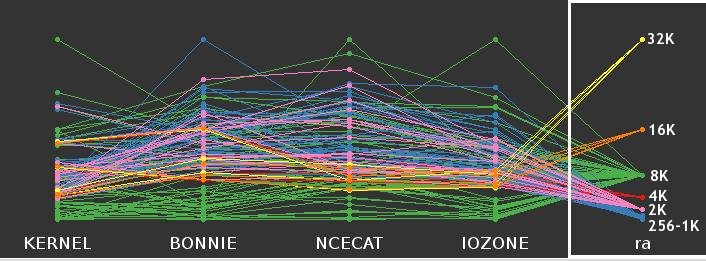

4.2.1. Readahead

Setting readahead to even the lowest levels above zero increased performance very slightly and increased read repeatability considerably. Since it's a freebie, it makes sense to increase it from the default 256B on most Linux systems to at least 4096B for just about any application. However, setting it higher than 8192 rarely had positive effects on large file reads such as the NCO processing, and had a negative impact on both character and block writes, regardless of other parameters.

The NCECAT test was the only case in which readahead had a significant effect on execution speed: setting it to 8K led to a 30% increase in speed (see below). This implies that large streaming reads could benefit significantly from setting readahead to 8K. However, increasing readahead beyond 8K led to a DECREASE in performance in most cases, contrary to the results cited in the 3ware whitepaper.

Depending on filesystem type and the type of read, in the bonnie++ tests increasing readahead had either no effect or a very slight positive effect. The only case with a noticeable positive trend was Reiserfs in RAID5 with 16 disks, but that case had fairly poor performance to begin with, for some reason. There was a broad peak in speed when readahead was about 1K-8K; however, increasing readahead over 1024B also led to decreases in write speeds. As such, there seems to be no reason to increase readahead above 8K.

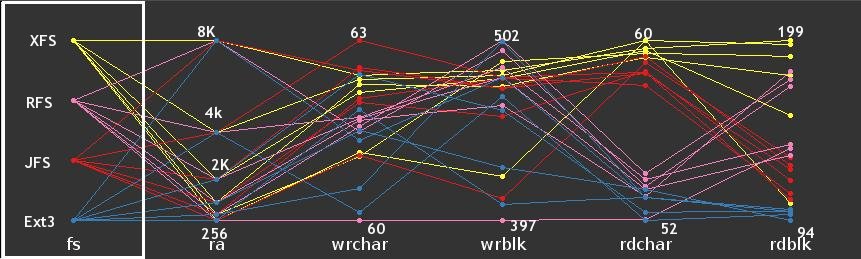

4.2.2. FileSystems

|

Note

|

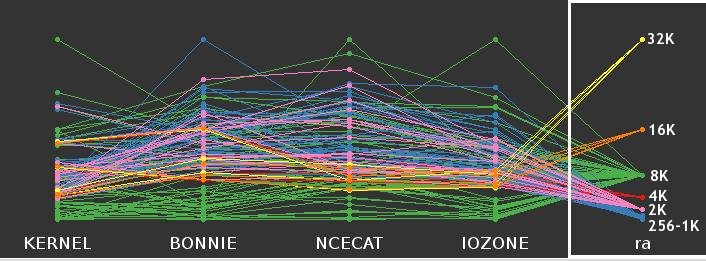

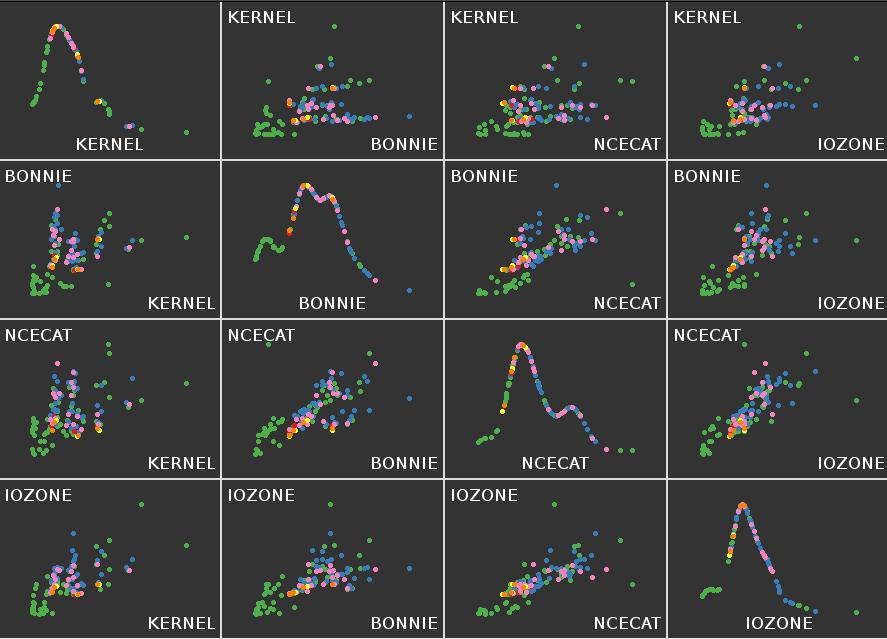

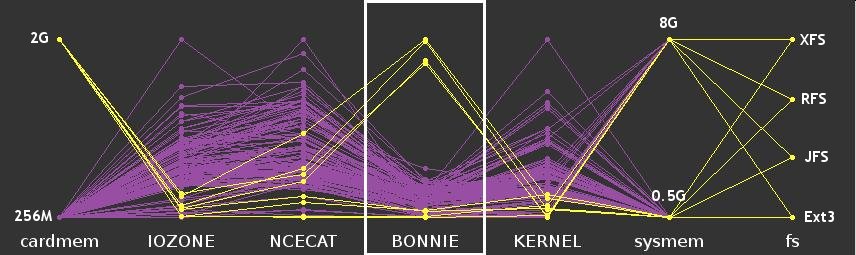

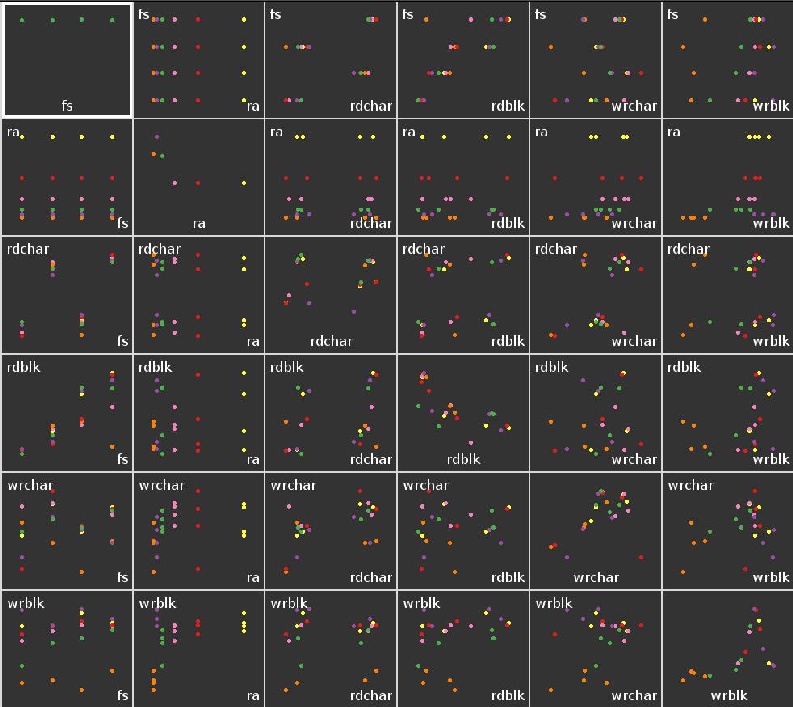

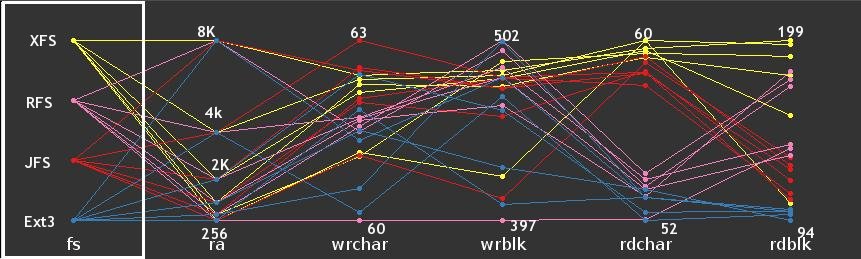

There are a number of Parallel Coordinate graphs of the type below. These graphs allow the

simultaneous visualization of multiple variables. A single line in the graph below corresponds to a record or row number in a table. A decent introduction can be found here although this graph was generated using the R statistical language and ggobi. |
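In a parallel coordinate graph, each variable gets its own vertical axis, usually rescaled to a common range, and each run becomes a polyline through its values on those axes. The report's graphs were made with R and ggobi; this stdlib-only sketch shows just the min-max rescaling step that puts differently scaled variables (readahead, disk count, MB/s) on comparable axes. The column names are hypothetical.

```python
def parallel_coordinates(rows, columns):
    """Map each row to a list of values in [0, 1], one per column (axis).

    Each column is min-max scaled independently, so every axis spans the
    same vertical range; a constant column is placed at 0.5.
    """
    lows = {c: min(r[c] for r in rows) for c in columns}
    highs = {c: max(r[c] for r in rows) for c in columns}
    lines = []
    for r in rows:
        line = []
        for c in columns:
            span = highs[c] - lows[c]
            line.append(0.5 if span == 0 else (r[c] - lows[c]) / span)
        lines.append(line)
    return lines


if __name__ == "__main__":
    runs = [  # illustrative values only
        {"readahead": 256, "disks": 8, "mb_per_s": 400},
        {"readahead": 8192, "disks": 16, "mb_per_s": 600},
        {"readahead": 2048, "disks": 16, "mb_per_s": 500},
    ]
    for line in parallel_coordinates(runs, ["readahead", "disks", "mb_per_s"]):
        print(line)
```

Each printed list is one polyline: its i-th value is the height at which the line crosses the i-th axis.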

Considering ALL combinations of parameters, it is revealing that of the top 20 values recorded in the Bonnie++ CHARACTER reads, XFS accounted for 17, with JFS accounting for the other 3 (including the fastest at 61MB/s); the top 20 values were grouped very tightly (60.4+/-0.053 MB/s).


For the 20 fastest BLOCK reads, XFS accounted for all of them, topping out at 688MB/s. Unless otherwise specified, there was an approximately equal distribution of RAID versions, cards, and readahead values.


For disk writes, Reiserfs contributed 7 of the top 20 fastest CHARACTER writes, equalled by 7 for ext3, with 5 for JFS and only 1 for XFS. This was a rare case where XFS was not among the fastest, but the speeds were so closely grouped that it was largely irrelevant.


For BLOCK writes, there were 2 cases: one with the full 8G system memory enabled, and the other with only 0.5GB enabled. In the first case, ext3 was the fastest at 2.12 GB/s, with the rest of the top 20 represented by JFS: 11, ext3: 5, ReiserFS: 4, and no representation by XFS. The variation was very low.


In the case where system RAM was restricted to 0.5GB, the maximum speed was achieved by XFS at 810MB/s, and XFS recorded 8 of the top 20 times, with JFS taking 9 and ext3 taking 3. Reiserfs was not represented. The top 20 speeds averaged 785+/-3.8 MB/s. All top 20 times were recorded on the Areca 16-port card running RAID5. In fact, in the top 50 times recorded, the Areca 16-port card was represented 47 times, all with RAID5 or 6. The other difference noted between the 8G and 0.5G cases was that the top 20 values in the 8G case had readahead values averaging 6016+/-769, whereas the corresponding values in the 0.5G case averaged 2073+/-552.


Overall, ReiserFS tended to do almost as well as XFS in block writes and reads, although its performance in character operations was almost as bad as that of ext3.

JFS also performed well overall, especially with readaheads above 4K, but it was very poor in block reads.

Ext3 was the oldest filesystem represented and was in general the slowest, although its write performance was not far below the average.

- XFS: In the large file reads and writes, XFS performed about as well as JFS. With the full 8GB RAM enabled, the system was able to write 2GB files at more than 2GB/s, a truly phenomenal rate. Reading was slower at ~600MB/s, but still impressive. Obviously, the write speed was a function of the file cache (the theoretical maximum would be about 1GB/s, based on aggregate single-disk write speeds), but even with only 512MB RAM enabled, it was still able to write at about 800MB/s and read at 570MB/s on block operations using the full 16 disks in RAID6.

  All filesystems were much less impressive when dealing with smaller files, reading and writing at close to single-device speeds of 60MB/s. However, overall XFS tied with JFS for best performance on the bonnie++ tests in providing consistent small reads.

  Because it is not bound by an underlying non-journaling architecture like Ext3, XFS initialized almost instantaneously (<3s) on every RAID size I tried (up to 8TB). This is not quite as dramatic an advantage as the number would suggest (being 1000x faster at initialization is a vanishingly small advantage over the lifetime of a storage server), but it certainly made things nicer for me. It would be useful in an emergency if a large storage server had to be provisioned and brought online quickly. There was never a failure that I could pin on XFS during my benchmarks.

- Reiserfs: It also initializes quickly, though more slowly than XFS and JFS, and works very well. It is a good choice for a journaling file system that will be used on small files, such as some mail systems or home directories, due to its ability to squeeze more space from its tail-packing. It excelled at small, numerous writes and reads, as can be seen in its superior performance in the kernel compile. A sad sidebar is that the follow-on Reiser4, which has some strong technical advantages, will probably not be widely adopted: although it has been released, it has not been accepted into the mainline kernel, and the technical lead is in deep, unrelated legal trouble.

- Ext3: The old reliable. There are more utilities to assist with ext2/Ext3 filesystems than for the others combined. For small file IO, it works very well. For larger file IO, its performance degrades somewhat, but not tremendously so. Large file performance depends more on system RAM for caching, but it is quite remarkable that such old technology stands up so well. The next version, ext4, is in testing now and performs better than Ext3 in most regards. It would be hard to recommend a filesystem other than Ext3 for a boot disk or small system that needs to be as reliable as possible. That said, I did record 1 failure with ext3 under the kernel compile test with the 3ware card.

- JFS: It is also very fast to initialize (slightly slower than XFS) and works very well. There were 2 instances when benchmarks failed due to JFS failing while running under the Areca controller (the OS continued to run, but the benchmarks failed and the JFS partition was unresponsive; I eventually had to reboot to bring back the device). This only happened under heavy load, and only with the JFS testing. It is certainly not enough for a statistical evaluation, but it made me uneasy. Otherwise it ran extremely well. During the ncecat test, JFS managed the fastest times except when the readahead was set to 8K; then it was narrowly beaten by XFS. It is also notable that its good performance seems to be more consistent: while XFS and JFS are comparable at the top end, there are more situations where XFS records much slower times as well as faster times.
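The XFS note above bounds sustained disk bandwidth at about 1GB/s from aggregate single-disk speeds. A back-of-the-envelope version follows, assuming ~60MB/s per disk (the single-device figure quoted above), ideal striping, and no controller or bus bottleneck; only data disks carry payload, so parity lowers the ceiling.

```python
def streaming_ceiling_mb_s(n_disks: int, parity_disks: int,
                           per_disk_mb_s: float = 60.0) -> float:
    """Upper bound on full-stripe streaming bandwidth, in MB/s.

    Only data disks contribute payload bandwidth; parity disks carry
    redundancy. Assumes ideal striping and no controller/bus bottleneck.
    """
    return (n_disks - parity_disks) * per_disk_mb_s


if __name__ == "__main__":
    for name, parity in (("RAID0", 0), ("RAID5", 1), ("RAID6", 2)):
        print(name, streaming_ceiling_mb_s(16, parity), "MB/s")
```

Under these assumptions, 16 disks give about 960MB/s for RAID0 and 840MB/s for RAID6, so write rates well above that, such as the >2GB/s seen with 8GB of RAM, must come largely from the page cache.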

4.2.3. Effect of number of disks in RAID

There was a noticeable increase in block IO speeds when more disks were used, up to about 8 disks, but no real difference when doing random, small reads and writes. There was a small (~15%) but significant increase in speed in the kernel compile when the number of spindles was increased from 8 to 12, although raising it to 16 did not increase the speed further, and increasing the readahead did not increase speed either. For Ext3, more spindles and smaller readaheads yielded the fastest kernel compiles.

4.2.4. More RAM on card

When the 2GB DIMM was used on the Areca card instead of the initial 256M DIMM, there was no effect when the kernel had the full 8G RAM to use as a file cache. When the system RAM was held at 512M, it had an overall positive effect. It also had a slight positive effect in the IOzone and NCECAT tests when system memory was constrained. However, in 4 cases in the Bonnie++ tests, the extra RAM was associated with a very large performance hit under both high and low system RAM conditions and all four filesystems (see above).

In any case, it's hard to justify putting that RAM on the controller card when it could be used more effectively in an Operating System context. The only reasons to do so would be to reserve it for controller use when the rest of the system RAM was being saturated, or to improve performance on an otherwise un-upgradeable system. In these situations it would provide a fairly cheap upgrade.

4.2.5. Performance during RAID initialization

The initialization phase of a large RAID5 or RAID6 is a process that can take many hours. The tests completed normally, albeit somewhat more slowly than usual, although the Bonnie++ tests completed in what could be considered a normal time. This implies that you can start using an initializing array for data storage, which can be of some use if there is a time crunch, and especially if there is prep work to be done before the data needs to be protected by the fully initialized RAID. I would not expect an initializing RAID to protect against data loss if a disk failed.

4.2.6. Support

Although I did not have reason to request it this time (all 4 cards worked nearly flawlessly), in previous years I've had reason to request technical assistance from both Areca and 3ware. In retrospect, the 3ware human support was very good; the Areca, less so. Both companies would benefit from putting their entire support email archives online. Areca seems to have done this better than 3ware, so the lack of human support from Areca was less of a problem, as more of their support documentation was available via Google.

Overall, I marginally preferred using the 3ware controller, due to its better support in Linux (especially the SMART data access that can be obtained with the smartmontools package). All the cards are astonishingly fast, and the results from testing with large data IO are particularly striking.

5. Conclusions

5.1. RAID size

Because parity consumes a fixed number of disks (one for RAID5, two for RAID6), the more disks in a RAID5 or RAID6 array, the smaller the fraction of overall storage lost to parity. Further, because of the enormous bandwidth of a PCIe link, I saw no loss of throughput in large RAIDs due to the number of disk channels, up to the 16 ports I tested. Therefore, unless there are specific reasons to use multiple smaller controllers, I recommend using the largest controller possible and the largest possible RAID configuration, as more spindles correlate with increased performance.
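To make the capacity argument concrete, the short Python sketch below computes the fraction of raw capacity left for data as (n - p)/n, where n is the number of disks and p the number of parity disks. It assumes equal-size disks and ignores hot spares and filesystem overhead; the disk counts are illustrative, not configurations from this study.

```python
# Usable-capacity arithmetic for single- and double-parity RAID.
# Assumes equal-size disks; ignores hot spares and filesystem overhead.
PARITY_DISKS = {"RAID5": 1, "RAID6": 2}
MIN_DISKS = {"RAID5": 3, "RAID6": 4}


def usable_fraction(n_disks: int, level: str) -> float:
    """Fraction of raw capacity left for data: (n - p) / n."""
    if n_disks < MIN_DISKS[level]:
        raise ValueError(f"{level} needs at least {MIN_DISKS[level]} disks")
    return (n_disks - PARITY_DISKS[level]) / n_disks


if __name__ == "__main__":
    for level in ("RAID5", "RAID6"):
        for n in (4, 8, 16):
            print(f"{level}, {n:2d} disks: {usable_fraction(n, level):.1%} usable")
```

For example, a 16-disk RAID6 keeps 14/16 = 87.5% of raw capacity for data, the same fraction as an 8-disk RAID5, while also tolerating a second disk failure.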

5.2. File System

The choice of file system depends on the primary use. To my surprise, the actual performance on identical hardware was not as different as I thought it would be. I would not hesitate to use Ext3 for a small boot/root file system. As mentioned, it is the default for most Linux systems, and for good reason: it is extremely well-characterized. I would NOT use it for a large data volume, as it is subject to regular, agonizingly long fscks. XFS or JFS would be a better choice for a data volume and even for general-purpose storage. JFS is notable in that it matched XFS performance and never degraded as XFS occasionally did.

Reiserfs is a good choice for a /home or mailspool partition that requires lots of head movement, but it's hard to recommend since JFS and XFS are such good general-purpose filesystems. I did not measure the space savings its tail-packing feature might confer, so on a space-constrained system it might have an advantage.

For all file servers, having lots of extra RAM for file caching is a no-brainer, even more so than higher-speed or multiple CPUs.

5.3. Preferred Controller

Between the Areca and 3ware controllers, there is no clear winner. Both do most things very well. Both provide administrative access via reasonably well-designed web servers. The 16-port Areca controller gives you a direct ethernet port into a dedicated web server on the card itself, which can be quite useful. The 3ware web server is an additional daemon that needs to be set up to run at boot. The 3ware also gives you a command-line utility that allows terminal control of all 3ware controllers in the server, which is useful for remote monitoring over slow networks. The 2 failures with JFS occurred when using the Areca controller, but it otherwise behaved admirably over the course of multiple hardware changes and tests. The 3ware controller manages to package the battery in the same slot as the controller itself, which may be important in a space-restricted server.

Neither the 3ware nor the Areca gives useful interpretation of SMART data from its drives, a strange failing for such high-end hardware, but smartctl can pass through the 3ware card to extract this data from the controller. Both the 8-port and the 16-port Areca cards support RAID6, but of the 3ware cards only the 16-port model does. Both Areca and 3ware also sell very high-density controllers (up to 24 ports on a card), which could support 48 drives on a relatively cheap 2-slot motherboard. At the theoretical limit of 250MB/s per lane, two 8-lane PCIe slots could supply up to 4GB/s (2 x 8 x 250MB/s), sufficient to support 24-disk arrays but probably pushing it for 48-disk arrays.
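The arithmetic behind the 4GB/s figure, and what it leaves for each disk when every drive streams at once, can be sketched in Python. The 250MB/s-per-lane figure is the one quoted above; the 70MB/s sustained per-disk rate is a hypothetical placeholder, not a measurement from these tests, and the sketch ignores controller and protocol overhead.

```python
# Theoretical PCIe 1.x budget: 250 MB/s per lane, per direction.
MB_PER_LANE = 250

# HYPOTHETICAL sustained streaming rate of one SATA disk, for comparison only.
ASSUMED_DISK_MB_S = 70


def total_bandwidth_mb(lanes_per_slot: int = 8, slots: int = 2) -> int:
    """Aggregate theoretical bandwidth of the slots, in MB/s."""
    return lanes_per_slot * slots * MB_PER_LANE


def per_disk_share_mb(n_disks: int, lanes_per_slot: int = 8, slots: int = 2) -> float:
    """Bandwidth each disk gets if all disks stream at once, in MB/s."""
    return total_bandwidth_mb(lanes_per_slot, slots) / n_disks


if __name__ == "__main__":
    print(f"two x8 slots: {total_bandwidth_mb()} MB/s")
    for n in (24, 48):
        share = per_disk_share_mb(n)
        print(f"{n} disks: {share:.0f} MB/s per disk, "
              f"{share / ASSUMED_DISK_MB_S:.1f}x the assumed disk rate")
```

Under these assumptions, 48 disks get roughly 83MB/s each, only modestly above the placeholder rate, which is the sense in which 48-disk arrays are "pushing it"; 24 disks get about twice that.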

When I split the 16 disks into multiple RAIDs and then tried to access them, the Areca card refused to allow access to them until I rebooted. The 3ware card worked every time, showing me new /dev/sdX devices corresponding to the new RAID configurations. I suspect that this reflects how long each company has been supporting Linux. In real life, this is not as annoying as it was during this testing. Most people will initialize a particular RAID configuration and run it until the machine dies; they will not be changing RAID configurations with the manic zeal I did.

6. Other Uses of the Storage Brick

Besides providing cheap storage capacity, the Storage Brick can also be configured for significant computational capacity. The current Storage Brick motherboard has two Socket F (1207-pin) CPU sockets populated with dual-core CPUs. The recent release of quad-core Opterons would theoretically allow this motherboard to host 8 Opteron cores (as well as 32GB of RAM). This provides a fairly capable compute node as well as large storage capacity. Fully populating the system with RAM and CPUs is overkill for a pure storage server; a low-end 32-bit system with 1GB of RAM would suffice for storage operations alone. However, fully populating such a system provides high-speed processing in addition to access to very large amounts of data. This maps well to a number of institutional requirements, such as mail service and database servers, as well as research applications.

Since it can be equipped with dual- and even quad-port Gigabit ethernet cards to supplement its 2 onboard Gigabit ethernet ports, the Storage Brick can simultaneously service multiple networks to act as a backup or NAS file server. It is especially appropriate for a backup service that does significant compression or de-duplication, since those operations can put its spare CPU capacity to work.

It's also worth mentioning that the Opteron, which uses the AMD64 architecture, can run 64-bit and 32-bit applications simultaneously (including mixed Symmetric MultiProcessing applications). Intel has also adopted this architecture (which Linux refers to as x86_64), and recent Intel CPUs have the same capability.

7. Further Testing

There's always something left out.

- a relational database benchmark as a test case. I was going to use SuperSmack, but ran out of time. There are others that should be tried as well, especially the Database Test Suite.

- a mail server. I should have run a Postmark test as well (available as an Ubuntu package).

- internal vs. external filesystem journals.

- other filesystems. I'd like to test Sun's apparently very well-designed ZFS, as well as the new Linux ext4 filesystem, in the same framework. ZFS has not yet been ported to the Linux kernel, although a port is in progress using the FUSE userland approach, made possible by Sun having open-sourced the code. NetApp recently sued Sun over some aspects of ZFS, claiming patent violations. The ext4 filesystem is available in new Linux kernels as a development option, though it is not yet recommended for production use.

- Logical Volume Managers. I would also like to test the Linux Logical Volume Managers LVM2 and EVMS against the ZFS volume manager. Snapshotting is a very attractive feature that all of them claim to have implemented. Perhaps next time.

Please let me know your priorities.

8. Appendix

8.1. Raw Data

The raw data from this analysis are available in 2 forms:

- the original files, which can be browsed here singly or downloaded in bulk as a 5MB tarball here.
- the SQLite database into which I've parsed most of the data from the above files. Not every file made it in: some of the data was gathered while the script was still being written and so was not in a form amenable to easy parsing. Those few files have been omitted. The SQLite database has 3 tables:
  - iozone - a large corpus (~6MB) of data from the iozone tests. Each iteration of the iozone test generates about 2400 values, which are indexed by all the parameters of the test, so the data grew quickly.
  - bonnie++ - much smaller than the iozone data; it contains only ~14 values per run.
  - other - the overall run times of the 5 different tests; extremely compact, at the cost of detail.
- the overall SQLite schema, which can be seen here in plain text.

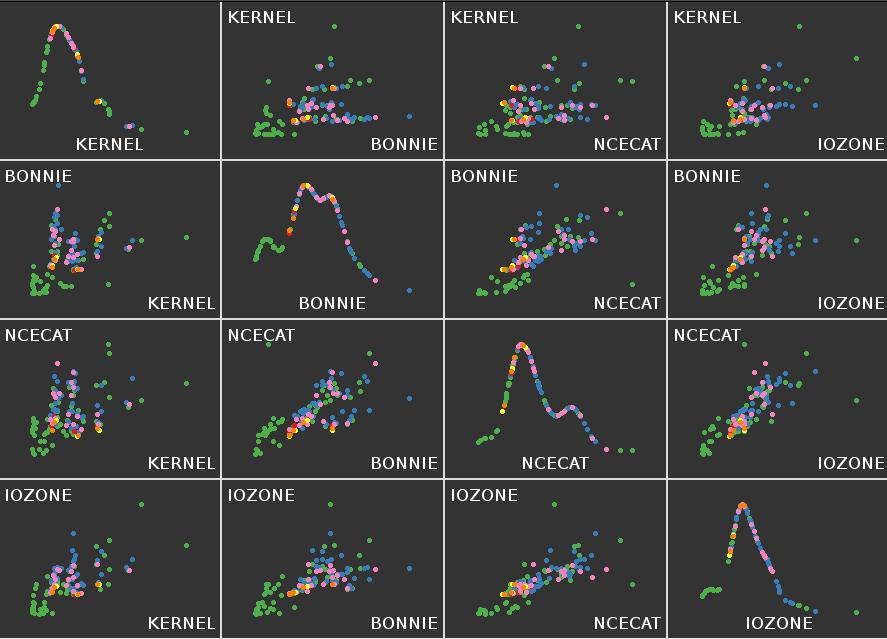
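For readers who prefer a script to the sqlite3 shell, the following Python sketch shows the kind of summary query the database supports. It builds a small in-memory stand-in for the other table from five of the sample rows shown in section 8.2; the column subset and types are assumptions, so check them against the published schema before pointing the query at the real database file (whose name is not given here).

```python
import sqlite3
from typing import List, Tuple

# Five rows copied from the sample table in section 8.2. Only a subset of the
# columns is kept; the real "other" table's schema is an ASSUMPTION here.
# Columns: card, ports, disks, rtype (RAID level), ra, fs, KERNEL, IOZONE
ROWS = [
    ("3ware", 16, 16, 6, 256, "ext3", 835.115628, 905.026998),
    ("areca", 16, 16, 6, 256, "ext3", 735.370265, 729.249532),
    ("areca", 16, 16, 5, 256, "ext3", 705.861009, 622.139284),
    ("areca", 16, 16, 5, 256, "ext3", 730.114779, 626.945034),
    ("areca", 16, 16, 5, 256, "ext3", 727.25922, 639.094527),
]


def build_db() -> sqlite3.Connection:
    """Create an in-memory stand-in for the 'other' table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE other (card TEXT, ports INTEGER, disks INTEGER, "
        "rtype INTEGER, ra INTEGER, fs TEXT, KERNEL REAL, IOZONE REAL)"
    )
    conn.executemany("INSERT INTO other VALUES (?, ?, ?, ?, ?, ?, ?, ?)", ROWS)
    return conn


def mean_times(conn: sqlite3.Connection) -> List[Tuple[str, int, int, float, float]]:
    """Average KERNEL and IOZONE times per controller and RAID level."""
    return conn.execute(
        "SELECT card, rtype, COUNT(*), AVG(KERNEL), AVG(IOZONE) "
        "FROM other GROUP BY card, rtype ORDER BY card, rtype"
    ).fetchall()


if __name__ == "__main__":
    conn = build_db()
    for card, rtype, n, kernel, iozone in mean_times(conn):
        print(f"{card} RAID{rtype}: {n} run(s), "
              f"KERNEL {kernel:.1f}, IOZONE {iozone:.1f}")
    conn.close()
```

The same GROUP BY pattern extends to any of the timing columns, grouped by card, RAID level, or filesystem.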

8.2. Using R & ggobi to visualize the data

The R language and ggobi are, respectively, free statistical and visualization systems that can be used to analyze and visualize multivariate data such as that provided in this study. While they can be used independently, they are also designed to work together - ggobi can be run from within R to visualize R dataframes (R's internal representation of a dataset, corresponding roughly to a table).

While R can directly query databases to provide dataframes (described in more detail here), I'll first describe how to load external tables, such as those created by an external database query or exported from a spreadsheet.

Given such a table, for example the pipe-delimited file other.16d.r5+6:

```
card|ports|disks|rtype|ra|fs|MKFS|MOUNT|BONNIE|KERNEL|IOZONE|NCECAT|DBENCH
3ware|16|16|6|256|ext3|1507.12798|0.966668|158.819063|835.115628|905.026998|124.557138|0.0
areca|16|16|6|256|ext3|1754.609643|62.738649|218.925547|735.370265|729.249532|101.68025|0.0
areca|16|16|5|256|ext3|626.779672|104.035842|137.148965|705.861009|622.139284|0.0|148.997351
areca|16|16|5|256|ext3|1835.480147|135.926012|134.950901|730.114779|626.945034|0.0|150.213541
areca|16|16|5|256|ext3|1893.842949|81.943981|185.853155|727.25922|639.094527|0.0|0.0
```

etc.

It's possible to slurp this into an R dataframe named df, then load the rggobi library and launch ggobi on it, with the following commands:

```r
# read the pipe-delimited table into an R dataframe named df
df <- read.table("other.16d.r5+6", sep = "|", header = TRUE)

# then load the ggobi library routines
library("rggobi")

# then load the R dataframe into a ggobi object 'ggo' and launch ggobi
ggo <- ggobi(df)
```

Once it is launched, you can use ggobi to interactively examine, scale, brush, identify, and plot the data in 1, 2, and 3 dimensions. Following is a screenshot of a ggobi 3D plot, allowing instant selection of any 3 variables out of all those available. This makes it very easy to quickly tour data relationships.

More information about using R and ggobi together is available as a PDF here.

8.3. Software used in this study

This study was performed entirely using Open Source Software. The tests were conducted on the Storage Brick running the Kubuntu Feisty 64-bit Linux distribution. The previously mentioned tests (Bonnie++, NCECAT, IOZONE, and of course the kernel compile) are all Open Source, as are the filesystem implementations. In analyzing the results, I used Perl scripts to parse the output and consolidated the extracted data in an SQLite database. Once the data was in SQLite, I used sqlite3, gnuplot, R, and ggobi to extract, examine, analyze, and plot the data. The GIMP and Krita were used to add labels to the graphs where needed. The report itself was composed in plain text with asciidoc markup and formatted using the elegant asciidoc tool. To see what it looks like, the asciidoc source text is here.

The script that performs these tests, fs_bm.pl, is placed in the public domain and is structured so that arbitrary tests can be embedded in its timing and reporting structure.
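The idea of embedding arbitrary tests in a common timing and reporting structure can be illustrated with a hypothetical Python sketch. This is not fs_bm.pl (which is Perl) and does not reproduce its behavior; the names run_suite, report, and NamedTest, and the stand-in tests, are invented for illustration. Each test is a named callable; the harness times it with a monotonic clock and emits one pipe-delimited row of configuration fields followed by timings, similar in shape to the sample table in section 8.2.

```python
import time
from typing import Callable, Dict, List, Tuple

# HYPOTHETICAL harness: not fs_bm.pl, just the same general idea.
NamedTest = Tuple[str, Callable[[], None]]


def run_suite(tests: List[NamedTest]) -> Dict[str, float]:
    """Run each named test in order; return wall-clock seconds per test."""
    timings: Dict[str, float] = {}
    for name, fn in tests:
        start = time.perf_counter()
        fn()
        timings[name] = time.perf_counter() - start
    return timings


def report(config: Dict[str, str], timings: Dict[str, float]) -> str:
    """One pipe-delimited row: configuration fields, then timings."""
    fields = list(config.values()) + [f"{t:.6f}" for t in timings.values()]
    return "|".join(fields)


if __name__ == "__main__":
    # Stand-in tests; a real harness would shell out to mkfs, mount, etc.
    suite: List[NamedTest] = [
        ("MKFS", lambda: time.sleep(0.01)),
        ("MOUNT", lambda: time.sleep(0.01)),
    ]
    config = {"card": "3ware", "disks": "16", "rtype": "6", "fs": "ext3"}
    print(report(config, run_suite(suite)))
```

Adding a new test then means appending one (name, callable) pair to the suite; the timing and reporting code stays unchanged.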

9. Acknowledgements

I wish to thank Don Capps (creator of IOzone) and Stuart Rackham (author of asciidoc) for their help, as well as Richard Knott at THINKCP for providing good advice about the initial configuration of the Storage Brick and for arranging the loan of the 16-port 3ware and Areca cards for this testing. Of course, thanks to Areca and 3ware for providing the cards without requiring NDAs or other restrictions on unflattering comment. Thanks to colleagues at NACS and UCI for their comments and critiques, but the mistakes are mine alone.

Thanks for reading this far.

Harry Mangalam