1. Introduction

In the following, Primary or 1° refers to the system in the OITDC; Secondary or 2° refers to the backup system in either ICS or CalIT2.

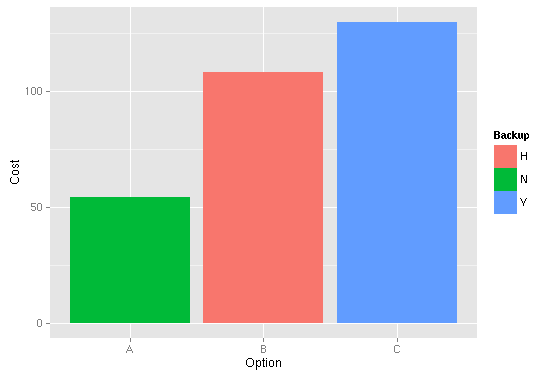

The Option labels (x-axis) refer to the Option letters below. The bars are colored by whether the configuration has a Full Backup (Blue), No Backup (Green), or Half Backup (Red). The y-axis shows the cost of each option in thousands of dollars.

| Backup Option | 1° Servers | 1° TB | 2° Servers | 2° TB | Cost | Spreadsheet |
|---|---|---|---|---|---|---|
| A - None | 2 | 80 | 0 | 0 | $50.5K | |
| B - Half | 2 | 80 | 1 | 40 | $108.2K | |
| C - Full | 2 | 80 | 2 | 80 | $129.9K | |

The options are listed in order of increasing cost. Any Backup (aka replication) of the primary storage cluster incurs the cost of the duplicated storage hardware, plus the cost of adding Single Mode Optical Fiber to the remote site and 2 x 10GbE switches so that the 2 storage clusters can talk to each other on a private high-speed network.

2. Option A: 80TB Primary Storage, no Secondary Storage ($50.5K)

- 2 storage nodes w/ 80TB usable (2 bricks of 40TB each) ($29K)
- 2X bandwidth of a single chassis.
- both storage nodes partially populated with disks (can expand to 240TB by adding only disks)
- 1 MetaData server ($6K)
- 2 I/O nodes ($10K)
- 5 GPFS server licenses and support ($5.5K)
- FDR IB rack switch ($8K)

3. Option B: 80TB Primary Storage, 40TB Secondary Storage ($108.2K)

- 3 storage nodes w/ 80TB usable (1° site: 2x 40TB ea; 2° site: 1x 40TB) ($43.5K)
- 2X bandwidth of a single chassis in 1° site.
- storage nodes partially populated with disks (can expand to 240TB (1°) / 120TB (2°) by adding only disks)
- 1 MetaData server ($6K)
- 2 I/O nodes ($15K)
- 6 GPFS server licenses and support ($6.6K)
- 1 FDR IB rack switch ($8K)
- 2 10GbE switches ($14K)
- install Single Mode Optical Fiber between OITDC and ICSDC

4. Option C: 80TB Primary Storage, 80TB Secondary Storage ($129.9K)

- 4 storage nodes w/ 80TB usable (each site has 2 bricks of 40TB each) ($58K)
- 2X bandwidth of a single chassis.
- storage nodes at both sites partially populated with disks (each site can expand to 240TB by adding only disks)
- 2 MetaData servers ($12K)
- 3 I/O nodes ($15K)
- 9 GPFS server licenses and support ($9.9K)
- 2 FDR IB rack switches ($16K)
- 2 10GbE switches ($14K)
- install Single Mode Optical Fiber between OITDC and ICSDC
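The priced line items in the three option lists can be summed as a cross-check against the headline costs. The following is a minimal sketch, not the spreadsheet itself: the dictionary layout and function names are illustrative, and the fiber installation is left out because the text gives no price for it.

```python
# Line items copied from the Option A, B and C lists, in thousands of dollars.
# The fiber installation has no price in the text, so it is not included here.
OPTIONS = {
    "A": {
        "stated": 50.5,
        "licenses": 5,
        "items": {
            "storage nodes": 29.0,
            "metadata server": 6.0,
            "I/O nodes": 10.0,
            "GPFS licenses": 5.5,
            "FDR IB switch": 8.0,
        },
    },
    "B": {
        "stated": 108.2,
        "licenses": 6,
        "items": {
            "storage nodes": 43.5,
            "metadata server": 6.0,
            "I/O nodes": 15.0,
            "GPFS licenses": 6.6,
            "FDR IB switch": 8.0,
            "10GbE switches": 14.0,
        },
    },
    "C": {
        "stated": 129.9,
        "licenses": 9,
        "items": {
            "storage nodes": 58.0,
            "metadata servers": 12.0,
            "I/O nodes": 15.0,
            "GPFS licenses": 9.9,
            "FDR IB switches": 16.0,
            "10GbE switches": 14.0,
        },
    },
}


def itemized_total(name: str) -> float:
    """Sum of the priced line items for one option, in $K."""
    return round(sum(OPTIONS[name]["items"].values()), 1)


def gap(name: str) -> float:
    """Stated headline cost minus the sum of the priced line items, in $K."""
    return round(OPTIONS[name]["stated"] - itemized_total(name), 1)


def cost_per_license(name: str) -> float:
    """GPFS license line item divided by the number of licenses, in $K."""
    opt = OPTIONS[name]
    return round(opt["items"]["GPFS licenses"] / opt["licenses"], 2)


if __name__ == "__main__":
    for name in OPTIONS:
        print(name, itemized_total(name), gap(name), cost_per_license(name))
```

With the figures as listed, the priced items come to $58.5K, $93.1K and $124.9K for A, B and C, so none matches its headline cost exactly. For B and C the difference may include the unpriced fiber installation; the sketch only reports the gap and does not attribute it.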

5. My recommendation

In terms of progression, the best approach is to install a Primary storage stack in the OITDC (Option A above) and get the interfaces up and running to confirm that it’s popular. Once the system has been shown to be useful and popular, and there is a demand for recharge backup, offer the Secondary/backup as an option. At that point there might be Departmental pressure both to provide it and to pay for it.

However, if we do provide the 1° and 2° simultaneously and there does not seem to be institutional support for the 2° geo-replicate, we can easily merge the hardware back into the 1° storage system.

6. Abbreviations and Explanations:

GPFS = IBM’s General Parallel File System

FhGFS = Fraunhofer File System

MDS = MetaData Server, required for FhGFS

IB = Infiniband

QDR = Quad Data Rate IB (40Gb/s)

FDR = Fourteen Data Rate IB (54Gb/s)

10GbE = 10Gb/s ethernet

SMF = Single Mode optical Fiber

MMF = Multi-mode optical Fiber

I/O = Input / Output

6.1. Generic Storage Servers

A Storage Server is a generic storage chassis, in this case with room for 36 disks. Each can be populated incrementally in chunks of 12 disks (a brick), with each brick providing 40TB. Since they are generic, they can be substituted with other similar or larger storage chassis as prices fluctuate. We are not bound to any vendor or supplier.
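The capacity figures quoted in the options follow directly from these numbers. A minimal sketch of the arithmetic, using only the 36-disk chassis, 12-disk brick and 40TB-per-brick figures above (the function names are illustrative):

```python
DISKS_PER_CHASSIS = 36   # disk slots in one storage chassis
DISKS_PER_BRICK = 12     # disks added per expansion step
TB_PER_BRICK = 40        # usable capacity of one brick


def brick_slots(chassis: int = 1) -> int:
    """Number of bricks that fit into the given number of chassis."""
    return chassis * (DISKS_PER_CHASSIS // DISKS_PER_BRICK)


def usable_tb(bricks: int) -> int:
    """Usable capacity in TB for a number of installed bricks."""
    return bricks * TB_PER_BRICK


def max_tb(chassis: int) -> int:
    """Capacity in TB when every slot in every chassis is populated."""
    return usable_tb(brick_slots(chassis))
```

For example, `usable_tb(2)` gives the 80TB of the primary site, `max_tb(2)` gives its 240TB expansion limit, and `max_tb(1)` gives the 120TB limit of the single secondary chassis in Option B.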

6.2. Distributed Filesystems

Distributed filesystems can be increased in size by adding more storage bricks. Their aggregate bandwidth can be increased by adding more chassis; i.e. a second chassis allows I/O at 2X the maximum of a single chassis, and 3 chassis allow 3X. This is the reason for initially providing 2 underpopulated chassis in Option A instead of filling the first.
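The trade-off can be made concrete by comparing two ways of housing the same 80TB. This is a sketch under the idealized assumption stated above, that bandwidth scales linearly with chassis count; real throughput also depends on the network and the clients. The `Layout` class is illustrative, and the brick figures (3 slots per chassis, 40TB per brick) are taken from the Generic Storage Servers section.

```python
from dataclasses import dataclass

BRICK_SLOTS_PER_CHASSIS = 3  # 36 disk slots / 12 disks per brick
TB_PER_BRICK = 40


@dataclass(frozen=True)
class Layout:
    chassis: int  # number of storage chassis
    bricks: int   # total bricks installed across all chassis

    def __post_init__(self) -> None:
        if self.bricks > self.chassis * BRICK_SLOTS_PER_CHASSIS:
            raise ValueError("more bricks than the chassis can hold")

    @property
    def usable_tb(self) -> int:
        return self.bricks * TB_PER_BRICK

    @property
    def bandwidth_multiple(self) -> int:
        # Assumption: ideal linear scaling, one unit of bandwidth per chassis.
        return self.chassis

    @property
    def free_brick_slots(self) -> int:
        return self.chassis * BRICK_SLOTS_PER_CHASSIS - self.bricks


one_fuller_chassis = Layout(chassis=1, bricks=2)
two_underpopulated = Layout(chassis=2, bricks=2)  # the Option A layout
```

Both layouts provide 80TB, but the two-chassis layout has twice the bandwidth multiple and four free brick slots instead of one.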

6.3. I/O nodes

These are servers placed between the end clients (Macs and PCs) and the main filesystem to provide I/O buffering and security isolation from clients. They export the main filesystem to the clients while keeping some control over access to it. By manipulating the CIFS/Samba rules and NFS exports, we should be able to prevent end-users from wandering the filesystem beyond where they should.
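As an illustration of the NFS side, an I/O node could export one subtree per group, restricted to that group's client network. The sketch below only builds lines in the standard `/etc/exports` format; the paths and subnets are hypothetical placeholders (documentation address ranges), and the option set is an assumption rather than a tested policy.

```python
def export_line(path: str, client: str, read_only: bool = False) -> str:
    """Build one /etc/exports entry limiting `path` to a single client network."""
    access = "ro" if read_only else "rw"
    # root_squash maps a client's root user to an unprivileged account;
    # no_subtree_check is a common choice when exporting a subdirectory.
    return f"{path} {client}({access},root_squash,no_subtree_check)"


# Hypothetical per-group exports: each group sees only its own subtree.
GROUP_EXPORTS = {
    "/storage/lab_a": "192.0.2.0/24",
    "/storage/lab_b": "198.51.100.0/24",
}


def render_exports(exports: dict[str, str]) -> str:
    """Render all entries as the text of an exports file."""
    return "\n".join(export_line(path, net) for path, net in exports.items()) + "\n"
```

Exporting per-group subdirectories rather than the filesystem root is what keeps a client from browsing other groups' data; the Samba shares would need equivalent per-share restrictions.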