UCI, like many UC campuses, is facing the dual squeeze of decreasing IT budgets and increasing licensing fees for our institutional Backup Systems. We are also facing growing requirements from clients as more data is gathered or generated, analyzed, and archived. In view of these pressures, the Office of Information Technology (OIT) is evaluating what our requirements are and which Backup solutions can address them more economically. We are evaluating both Proprietary and Open Source Software (OSS) approaches, and the optimal solution may be a combination of the two.

Any Backup approach is guided by at least 2 issues:

- The value of the data (or the cost of replacing it).
- The cost of backing it up.

Much of the most valuable institutional data is stored on high-cost, high-reliability, highly-secured central servers. This makes backup fairly easy and most such devices have inherent or included data redundancy or data protection, making decisions about what backup system to use much easier (in some cases, there is no choice at all since the proprietary nature of the storage allows only the vendor’s implementation).

The situation is somewhat different for a university, which brings in much of its support funding as overhead on grants. Such faculty-initiated grants brought in ~$328M in external funding last year for UC Irvine alone. Those grant proposals were composed on, and still largely reside on, personal laptops and desktops scattered around the campus, and the vast majority of these machines are backed up sporadically, if at all. I’ve personally tried to rescue at least 5 grant proposals that were lost to disk crashes or accidental deletion days before the submission date. That is valuable data, and it would be exceptionally useful to provide backup services to such users, even setting aside the somewhat less critical primary data from their labs. At UCI, this includes about 400 faculty who write for external grants on a regular basis. Any Backup system should at minimum cover these people, so its scalability and its Mac/Windows client compatibility are quite important.

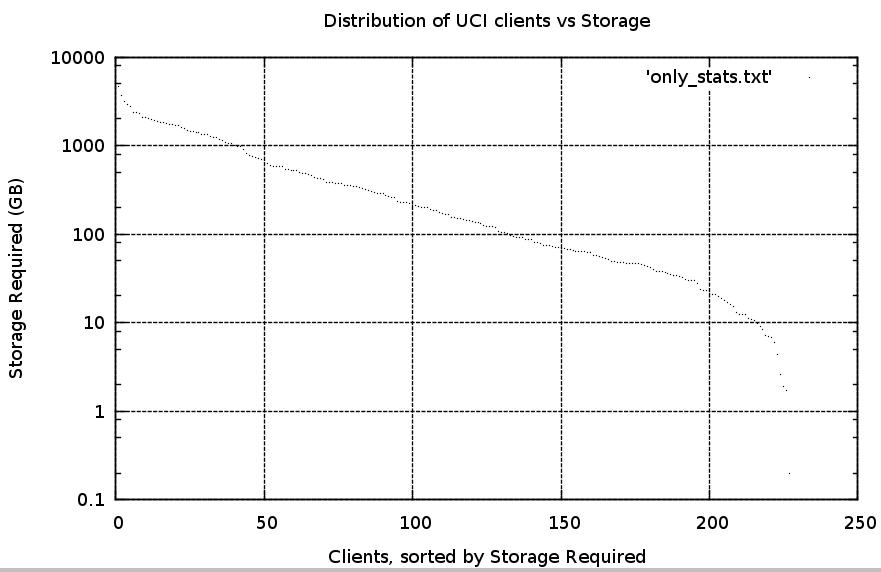

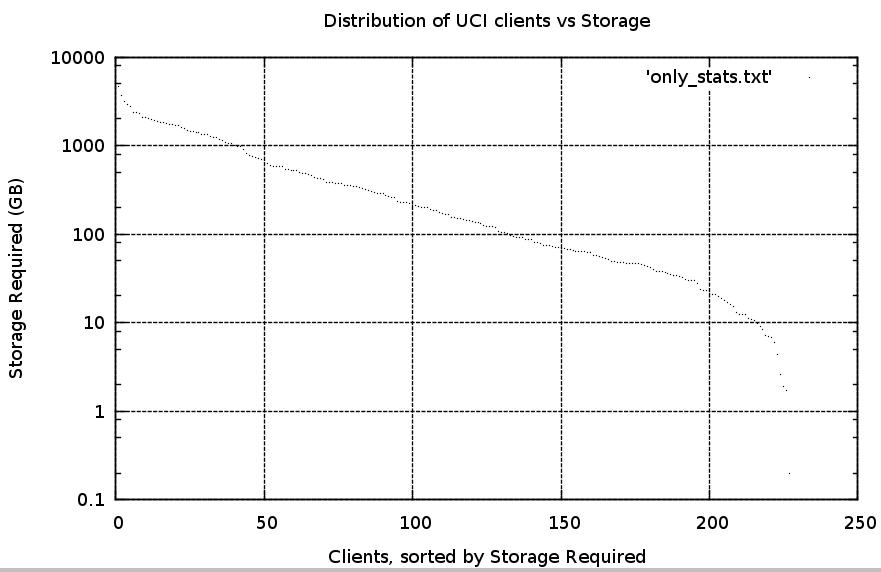

We currently use Networker to back up ~230 clients (mostly other servers) to 3 backup servers, with storage requirements as shown in the figures above and summarized below:

| Statistic | Value | Meaning |
|---|---|---|
| Sum | 109326.2 | total GB |
| Number | 227 | # clients |
| Mean | 481.61 | GB / client |
| Median | 155.9 | GB / client |
| Min | 0.2 | GB on smallest client |
| Max | 4651.2 | GB on largest client |
| Range | 4651 | difference between Max and Min |
| Variance | 523643.27 | among all clients |
| Std_Dev | 723.63 | among all clients |
| SEM | 48.02 | among all clients |
| Skew | 2.43 | among all clients |
| Std_Skew | 14.99 | among all clients |
| Kurtosis | 6.97 | among all clients |
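If anyone wants to re-run these numbers against a fresh Networker client report, the minimal Python sketch below shows how the rows relate to each other. The input list is hypothetical. The table does not say which skewness estimator was used, so the moment-based skew and the `skew / sqrt(6/n)` standardization here are assumptions, and kurtosis is omitted.

```python
import math
import statistics


def summarize(gb_per_client):
    """Summary statistics of per-client backup volume (GB), mirroring the table above."""
    n = len(gb_per_client)
    mean = statistics.mean(gb_per_client)
    var = statistics.variance(gb_per_client)       # sample variance (n - 1 denominator)
    sd = math.sqrt(var)
    # ASSUMPTION: moment-based skewness g1; the table's actual estimator is not stated.
    m2 = sum((x - mean) ** 2 for x in gb_per_client) / n
    m3 = sum((x - mean) ** 3 for x in gb_per_client) / n
    skew = m3 / m2 ** 1.5 if m2 else 0.0
    return {
        "sum": sum(gb_per_client),
        "n": n,
        "mean": mean,
        "median": statistics.median(gb_per_client),
        "min": min(gb_per_client),
        "max": max(gb_per_client),
        "range": max(gb_per_client) - min(gb_per_client),
        "variance": var,
        "std_dev": sd,
        "sem": sd / math.sqrt(n),                   # standard error of the mean
        "skew": skew,
        "std_skew": skew / math.sqrt(6 / n),        # skew over its approximate standard error
    }


print(summarize([1, 2, 3, 4, 10]))                  # hypothetical per-client GB figures
```

As a sanity check against the table itself: 723.63 / sqrt(227) ≈ 48.0, which matches the SEM row, and 2.43 / sqrt(6/227) ≈ 14.9, which is close to the Std_Skew row.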

We currently use about 1/4 of an FTE to administer the Networker system after setup.

The IAT recharge rates for this service are listed here. In summary, the charge is $20/mo per system, plus $0.39/GB for storage and $40 per file restore.
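To compare this recharge with the alternatives, it helps to turn it into a per-client figure. The sketch below assumes the $0.39/GB storage rate is charged monthly, which the summary above does not say, so its output is illustrative only. The helper name is hypothetical.

```python
def networker_monthly_charge(gb_stored, restores=0,
                             per_system=20.00, per_gb=0.39, per_restore=40.00):
    """Monthly IAT recharge for one Networker client.

    ASSUMPTION: the $0.39/GB storage rate is treated as a monthly rate.
    """
    return per_system + per_gb * gb_stored + per_restore * restores


print(round(networker_monthly_charge(155.9), 2))   # median client, no restores
```

Under that assumption, the median Networker client (155.9 GB) with no restores would cost about $80.80/month.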

Retrospect is a disk-only backup system.

We currently pay $620/yr for this license and currently have 59 clients (18 Macs, rest Windows).

We currently charge $87.50/user/year with a 50GB limit on storage, with self-service file restores.
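The following sketch checks how the Retrospect recharge compares with its license cost, using only the two figures above. Staff time, disk hardware, and the 50GB cap are not modeled, and the helper names are illustrative.

```python
import math

LICENSE_PER_YEAR = 620.00   # Retrospect license cost quoted above
FEE_PER_USER = 87.50        # annual recharge per user (50GB cap)


def retrospect_recovery(users):
    """Annual recharge income and surplus over the license cost (nothing else is counted)."""
    income = users * FEE_PER_USER
    return income, income - LICENSE_PER_YEAR


def users_to_cover_license():
    """Smallest number of paying users whose fees cover the license alone."""
    return math.ceil(LICENSE_PER_YEAR / FEE_PER_USER)


print(retrospect_recovery(59), users_to_cover_license())
```

With the current 59 clients, this gives $5,162.50/yr in recharge against the $620 license, and 8 users would cover the license by themselves.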

There are perhaps 50 OSS Backup systems, but most of them are too limited in their features or maturity to be considered. Only 3 seem to rise to the level of possibility, though for different things:

Amanda, Bacula, and BackupPC share these characteristics:

- All have commercial support available now or imminently.
- The OSS version is, of course, free for an unlimited number of servers and clients.
- All can back up to disk.
- All have Open Source versions (although Amanda and Bacula also have paid versions that add non-OSS extras).
- All can support MacOSX, Windows, and *nix clients via some combination of rsync, samba, or a semi-proprietary protocol (the Bacula protocol is OSS, but only Bacula uses it).
- All can support 10s-100s of clients per server.
- None currently supports the NDMP protocol (though Bacula and Zmanda are planning it).
- None supports transparent Bare Metal Restore.
- None supports duplicate on backup.
- None natively supports snapshots, although all can be run on Solaris to gain a number of ZFS features such as snapshots, filesystem compression, and slightly better I/O. However, while Solaris is certainly stable, some aspects of ZFS still seem to be causing problems.

(To ease a transition from Linux to Solaris, Nexenta is a free distribution that pairs the OpenSolaris kernel with an Ubuntu-based userland.)

4.1. Amanda/Zmanda

- useful for separate instances of backup servers servicing sets of clients (star config)
- stores to tape or disk; can use barcode writers and readers to generate and process labels
- uses standard Unix text-based config files; seems more easily configurable than Bacula, though less so than BackupPC (configured by Web GUI or text files)
- very flexible backup scheduling mechanism
- uses open and common storage formats (tar, dump, gzip, compress, etc.)
- very secure, with on-wire and on-disk encryption
- CLI or GUI available.
- mature (16 yr), extremely well-reviewed C source code. Zmanda says that they are rewriting it in Perl for easier maintenance and to encourage more external contributions.
- very large user base
- Zmanda extension for backing up MySQL.
- uses its own internal data structures, so no additional DB instance is required.
- Zmanda-supplied technical PDF about current and near-future options (NDMP, others)
- Commercial support costs are as described here.
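Amanda writes ordinary tar/dump images, optionally gzip-compressed. In principle, then, a backup can be read with standard tools even if the Amanda server is gone. The Python sketch below assumes a gzip-compressed tar image preceded by a fixed-size text header. The 32 KiB header size is an assumption to verify against your own volumes, and `list_dump_image` is a hypothetical helper, not part of Amanda.

```python
import io
import tarfile

HEADER_BYTES = 32 * 1024  # ASSUMPTION: size of the text header preceding each dump image


def list_dump_image(raw: bytes, header_bytes: int = HEADER_BYTES):
    """List the files in a gzip-compressed tar image after skipping a fixed-size header."""
    payload = io.BytesIO(raw[header_bytes:])
    with tarfile.open(fileobj=payload, mode="r:gz") as tar:
        return tar.getnames()
```

The point of the design is that nothing here depends on backup-vendor code: the standard library's `tarfile` (or the `tar` and `gzip` command-line tools) is enough to get at the data.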

4.2. Bacula

- Commercial support available
- stores to tape or disk
- allows multiple servers to service multiple clients simultaneously, allowing a much larger single instance
- uses SQLite, MySQL, or PostgreSQL as the DB (all well-supported and understood), but this adds complexity, and the DB is therefore a critical link.
- published storage code, but not as widely used as Amanda's
- Commercial support costs are as described here.
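Restores from a DB-backed system are driven by catalog queries such as "which job and volume hold the newest copy of this file?". That is why the catalog DB becomes a critical link. The sketch below uses Python's built-in `sqlite3` with a deliberately tiny, hypothetical schema. It is not Bacula's actual catalog schema, only an example of the kind of lookup such a catalog answers.

```python
import sqlite3


def make_catalog():
    """In-memory catalog with a hypothetical two-table schema (not Bacula's)."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE job  (job_id INTEGER PRIMARY KEY, client TEXT, finished TEXT);
        CREATE TABLE file (job_id INTEGER REFERENCES job(job_id), path TEXT, volume TEXT);
    """)
    return db


def record(db, job_id, client, finished, files):
    """Register one finished backup job and the (path, volume) pairs it wrote."""
    db.execute("INSERT INTO job VALUES (?, ?, ?)", (job_id, client, finished))
    db.executemany("INSERT INTO file VALUES (?, ?, ?)",
                   [(job_id, path, vol) for path, vol in files])


def latest_copy(db, client, path):
    """Return (job_id, volume) of the newest backup holding this file, or None."""
    return db.execute("""
        SELECT f.job_id, f.volume FROM file f JOIN job j USING (job_id)
        WHERE j.client = ? AND f.path = ?
        ORDER BY j.finished DESC LIMIT 1
    """, (client, path)).fetchone()
```

If that database is lost or corrupted, the information behind this query has to be rebuilt from the backup volumes themselves before restores become easy again.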

4.3. BackupPC

- Zmanda will be providing commercial support for BackupPC very soon, probably ~$10/client for large academic institutions.
- stores only to disk, not tape; therefore not useful for long-term archiving unless we are willing to buy appropriately large hardware.
- support for client restores
- UCI-written support for client self-registration.
- rsync support depends on a Perl implementation of rsync, so it lags the most recent rsync features by a few revisions.
- supports file de-dupe via hard links, but hard links limit the storage to one filesystem (though XFS and ext4 support filesystems of up to 8 EB and 1 EB respectively).
- uses a file tree, not a DB, as the data structure; simpler but more primitive.
- uses no proprietary or application-specific client software; therefore very simple to implement and robust for restores.
- supports encrypted data transfer for MacOSX and Linux, but not (easily) for Windows (encryption requires an ssh public key from the server and ssh remote execution of Windows executables).
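Hard-link de-dupe works roughly as follows. Each unique file content is stored once in a pool, and every backup containing that content gets a hard link to the pooled copy instead of a new copy. The minimal Python sketch below assumes SHA-256 content hashes as pool keys; BackupPC's real pool naming and hashing differ. `store_with_pool` is a hypothetical helper.

```python
import hashlib
import os
import shutil


def store_with_pool(src, dest, pool_dir):
    """Back up src to dest, sharing one pooled copy among files with identical content."""
    h = hashlib.sha256()
    with open(src, "rb") as f:
        for block in iter(lambda: f.read(1 << 20), b""):  # hash in 1 MiB pieces
            h.update(block)
    pooled = os.path.join(pool_dir, h.hexdigest())
    if not os.path.exists(pooled):
        shutil.copy2(src, pooled)          # first time this content has been seen
    os.makedirs(os.path.dirname(dest) or ".", exist_ok=True)
    os.link(pooled, dest)                  # later copies cost only a directory entry
    return pooled
```

`os.link` only works within one filesystem, so the pool and all backup trees must share a filesystem. This is exactly the single-filesystem limit noted above.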

4.4. Some Feature Comparisons

4.5. Crude Measures of popularity

Via Google-linking (a very crude measure; very sensitive to key words)

- Amanda/Zmanda: 411,000 Google links; 460 pages linking to zmanda.com; 252 linking to amanda.zmanda.com
- Bacula: 402,000 Google links; 468 pages linking to www.bacula.org.
- BackupPC: 267,000 Google links; 5,070 pages linking to backuppc.sourceforge.net; 3,140 pages linking to backuppc.sf.net
- Veritas: 255,000 for "veritas backup software"; 5,510 linking to http://www.symantec.com (all products)
- Networker: 66,500 for "EMC networker"; 2,370 linking to emc.com (all products)

Google Trends indicates that the Search Volume Index is decreasing rapidly for Veritas and holding constant for EMC Networker, Bacula, and BackupPC. Bacula is ~3x the value of BackupPC, which is itself 2x EMC Networker. Veritas has decreased to just above BackupPC. Zmanda only started in 2006 and does not have much of an index built up, but it shows significant spikes in News Reference Volume. "amanda backup" has been decreasing since 2004 and remains about 1/2 of BackupPC and 1/6 of Bacula.

I would like to see automatic backup of all of our faculty’s desktops/laptops (about 1000) to shield against catastrophic loss of recent data. Because of funding issues, I’m not sanguine about the chances for this unless we go with a pure OSS solution, which would be considerably better than nothing at all.

5.1. Details

At 10GB per faculty machine, this would mean a storage server of about 20TB (now a medium-sized file server). At 1% of the data changing per day, on the order of 100GB a day would have to be transferred for incremental backups. At 7 MB/s (a decent transfer rate over 100 Mb/s Ethernet), transmission time is only about 4 hrs, easily done in a night, in parallel sessions. Measured on an Opteron backup server (doing server-side compression and file de-duping via hard links), it takes about 25% of a CPU core to handle 1 backup session, so a 4-core machine could theoretically handle about 16 simultaneous backups, if the network can supply it with enough data. Our test backup server is currently single-homed, but it has 5 interfaces, so it could easily be multi-homed. If we use OSS Backup software, it will cost about $10K in hardware to give 1000 very valuable PCs or laptops at least protection against catastrophic loss.
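The back-of-envelope numbers above can be reproduced with a few lines of Python. The defaults are the figures from this paragraph, 1 GB is taken as 1000 MB, and the transfer time is for a single stream; parallel sessions would shorten it if the network allows. The sketch covers only primary data (10TB). The headroom up to the 20TB server, presumably for retained versions, is not modeled, and the helper name is illustrative.

```python
def nightly_estimate(machines=1000, gb_per_machine=10, daily_change=0.01,
                     rate_mb_per_s=7, cpu_per_session=0.25, cores=4):
    """Return (primary GB, changed GB per night, single-stream hours, max CPU-bound sessions)."""
    primary_gb = machines * gb_per_machine            # data held on the clients
    changed_gb = primary_gb * daily_change            # incremental volume per night
    hours = changed_gb * 1000 / rate_mb_per_s / 3600  # GB -> MB, then seconds -> hours
    sessions = int(cores / cpu_per_session)           # concurrent backups the CPUs can handle
    return primary_gb, changed_gb, hours, sessions


print(nightly_estimate())   # about 10,000 GB, 100 GB/night, just under 4 hours, 16 sessions
```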

Please feel free to expand on (or critique) these points.

6.1. Clients

- what features of current clients are actually used? (Do you need a wiki or calendaring function in your backup software? Why pay for unused features?)
- how many clients are currently served?
- OS distribution of clients
- minimum backup cycle
- how much data per client per cycle?
- do clients need to be able to initiate their own backups?
- should problem notifications be emailed to clients, or just to the admin?
- mechanism of backup (rsync or proprietary)
- compression on client or server (open or proprietary)
- are client upgrades done from the server, or do they need client involvement?
- preferred client registration (self or admin registration?)
- support for mobile client IPs, or static IPs only?
- local nets only, or Internet support (backup/restore from Europe?)
- special application backups required? MS Exchange, RDBMSs, CMSs
- does the timing of backups have to be client-adjustable, or just at night, or doesn’t it matter?
- typical client ethernet type and number of hops to server
- need to support secure backup over wireless?
- can open files be backed up? Windows/Linux/Mac +
- do you need to back up encrypted filesystems and, if so, which features will work with such filesystems?
- are clients cluster-aware? Can they back up to a cluster or to one of a set of distributed servers? +
- if the software backs up Windows servers, is it SharePoint-aware? Exchange-aware? +
- if the software backs up Windows servers, can it do hot reinserts into Active Directory? For example, can it restore a single security group deleted from a domain controller, or a single mailbox on an Exchange server? +
- can users recover files themselves? If so, are they properly restricted to recovering only files they own? +
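For the last question, the restriction amounts to filtering the backup catalog by the ownership recorded at backup time. The sketch below uses a hypothetical metadata mapping of path to numeric owner ID. Windows clients would need SIDs rather than Unix UIDs, which this does not handle.

```python
def restorable_files(catalog, requester_uid):
    """Return the backed-up paths a user may restore: only those they owned at backup time.

    catalog: hypothetical mapping of backed-up path -> numeric owner UID.
    """
    return sorted(path for path, owner_uid in catalog.items() if owner_uid == requester_uid)


catalog = {"/home/alice/grant.doc": 1001, "/home/bob/data.csv": 1002}
print(restorable_files(catalog, 1001))
```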

6.2. Server & Admin

- what features of the server are actually used? (Why pay for unused features?)
- how many servers are required per 100 clients?
- what are the FTE setup, configuration, and support requirements for the server and each type of client?
- do servers stage to disk before writing to tape? +
- if there are multiple servers, are they synchronized or independent?
- type of server required (OS, CPUs, RAM, used for other services?)
- is there an institutional inability to deal with a particular platform?
- how many network interfaces per server are typically used?
- data format on server (open, proprietary)
- storage (tape / tape robot, disk, hierarchical)
- type of server admin interface used, preferred (native GUI, Web, CLI)
- database or other support software required (OSS, proprietary)
- how are server upgrades done?
- does a feature of the backup server require or preclude a specific filesystem type?
- can you have (do you need) standby servers?
- how much of the system can be tested at once to see if it fits our situation? Will the proprietary-software vendors allow us to set up a multi-server installation over the course of 3 months to verify their claims?
- what is the complexity of the system? For a particular feature, is the complexity required to implement or support it worth the effort?
- is the software database server-aware? For example, can it back up Oracle databases without having to take them offline? +
- does it support direct fiber/fiber-aware backups? +

6.3. Backup Protocol

- what kinds of backups are required (disaster recovery, archival, incremental, snapshots)?
- network protocol used, preferred
- encryption requirements (client-side? server-side?)
- type of data compression used, preferred: format, block-level de-duping? file-level de-duping? with HW acceleration or just software?
- is dedupe technology required, versus just buying more storage media? If needed, what level is needed? (compression of individual files, hard links to files, proprietary dedupe technology, target- or source-based dedupe, etc.)
- does the software deduplicate to tape, or does it reconstitute the data and then write it to tape? +
- does the de-duplication component (if the backup software has one) write both the deduplicated data and the de-duplication table to physical tape? (This obviates the need to have the same backup server to reconstitute the data.) +
- if using rsync, which version? Version 3.x has significant advantages over 2.x. =
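Here is the file-level vs. block-level distinction above in concrete form. File-level de-dupe (as with hard links) only saves space when whole files are identical. Block-level de-dupe also shares the unchanged parts of edited files. The sketch uses fixed-size blocks and SHA-256; real products often use variable-size, content-defined chunks instead. `stored_bytes` is a hypothetical helper.

```python
import hashlib


def stored_bytes(files, block_size=None):
    """Bytes actually stored after de-duplication.

    block_size=None: whole-file (file-level) de-dupe, as with hard-link pooling.
    block_size=N:    fixed-size block-level de-dupe with N-byte blocks.
    """
    seen = {}  # content hash -> chunk length
    for data in files:
        if block_size is None:
            chunks = [data]
        else:
            chunks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
        for chunk in chunks:
            seen.setdefault(hashlib.sha256(chunk).digest(), len(chunk))
    return sum(seen.values())


v1 = b"A" * 4096 + b"B" * 4096   # original document
v2 = b"A" * 4096 + b"C" * 4096   # same document with the second half edited
print(stored_bytes([v1, v2]), stored_bytes([v1, v2], block_size=4096))
```

The two 8 KiB versions store 16384 bytes with file-level de-dupe, but only 12288 bytes with 4 KiB block-level de-dupe, because the shared first block is kept once.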


6.4. Cost, Cost Efficiency, & Legal Concerns

- what is the cost per user for the system?
- what is the coverage of the various systems at different price points?
- what is the tradeoff of moving up one curve and down another? (for example, the cost of using inefficient dedupe technology and compensating with more storage, vs. using efficient but proprietary dedupe technology)
- do the constraints on the variants of dedupe, compression, and encryption conflict?
- what is the institutional cost of using technology that does not cover all clients equally vs. the cost of not covering them at all?
- what features in the proprietary software will actually be used? (Since we have such systems, we should be able to document this at least for Networker and Retrospect.)
- what is the cost and timing of scaling? If we suddenly have to provide backups for a new department, what is the $ cost and the time lag?
- if it’s possible to go out of compliance, what is the cost of doing so?
- what legal recourse is there if the system fails in some way?

We are still in a preliminary mode for this evaluation, but if you have suggestions, queries, or would like to be notified of the final result and sent any documentation of the process, please let me know.

7.1. Contributed Suggestions

8.1. Books

The O’Reilly book Backup & Recovery: Inexpensive Backup Solutions for Open Systems is a good overview of some important considerations for a backup system. UC people can read it in its entirety here via O’Reilly Safari

8.2. Web sites

Curtis Preston, the author of the above book, runs a backup-related site called BackupCentral, which is a very good information clearinghouse / blog on all things backup.

8.3. Whitepapers

Of variable quality, some sponsored by vendors.

Backup on a Budget (PDF), by the ubiquitous Curtis Preston. It reiterates many of his points, especially that for many organizations backup is not rocket science, that people are the most expensive thing you pay for, and that backup to clouds may be useful (but mostly on rare occasions).

8.4. Useful(?) individual pages

8.4.1. fwbackups

Via TechRepublic’s 10 outstanding Linux backup utilities: a very short list, with some interesting choices. fwbackups is a very slick little program written in Python/GTK that can work on all platforms (but GTK on Windows requires the whole GTK library). It’s not an enterprise system, but if you have a personal Linux box to back up, it’s pretty straightforward and can use rsync/ssh to encrypt over the wire. However, it does require your own shell login and dedicated directory space on a server.

Boxbackup is not in the running for an enterprise backup system, but is interesting for near-realtime backup for a small group of clients.

The latest version of this document should always be here.