1. TLDR Summary

2. Executive Summary

-

There are lots of choices in commercial services & many do approximately what academic users need.

-

A few choices are Open Source Software (OSS), notably OwnCloud or Pydio that may be appropriate & cost-effective as a campus service.

-

Bandwidth and latency will play larger roles as BigData in research becomes more important. Both are much better within a campus than to remote commercial services. Academic sites have access to high-speed research networks and the difference in transfer speeds are on the order of 10-100x.

-

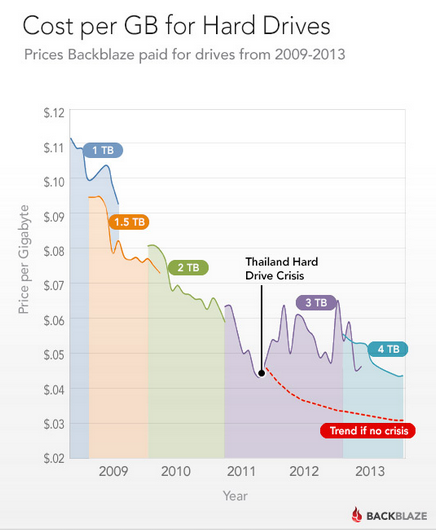

Raw storage costs continue to halve every 14 mo.

-

At the time of this writing, bare storage costs are roughly $100K/PB.

-

There are substantial technical differences between POSIX and Object filesystems and the differences should be understood.

-

Commercial "solutions" start at roughly 3x that cost and rise rapidly.

-

The difference between Open Source and proprietary solutions ones are often increased costs for the expertise to run Open Source systems vs proprietary appliances.

-

Administrative and user requirements are often at cross purposes and the end decisions are often administrative.

-

Any choice will result in a long-term vectoring and lock-in of substantial campus resources.

-

The best choice may not be an either/or but split use of both local and commercial services where one will keep the other honest and responsive.

3. Introduction

Academic file services have changed considerably over the years, and administrative support for those services has not kept up. In response, many researchers have turned to easy-to-use commercial services like DropBox and Google Drive, but even these have their limitations, legal as well as technical. Dr. Crista Lopes of ICS has written a very good overview of the problem and a solution, albeit with far more resources and technical chops than most researchers have.

The first 6 sections of this document outline the important parameters of what an academic file service should provide, some background information, and enough points of contention to stimulate discussion. Feel free to jump around using the section headers. The remaining sections are probably of interest only to geeks and system morlocks.

I will skip the obligatory warning of the ongoing and increasing data deluge except to say that academic research is especially susceptible to this increase in BigData and that a lot of it is unstructured (not a database) or semi-structured (often very large uncompressed ASCII text files). A PhD thesis in the field of Genomics these days can easily involve handling 100TB of data.

Data also has a value arc. Raw data tends to be large, then decreases in size and increases in value as it is analyzed, often with significant bulges in size from intermediate processing steps. This typically means that active data is relatively large but published data is generally quite small.

Like any other organization, academics and students have personal and research files and these tend to be intermingled since academics typically mix their personal and professional lives on their devices - a variant on the Bring Your Own Device (BYOD) problem.

This document addresses this messy situation as a whole as much as possible. It addresses academic storage in particular, but unless there is a significant departure between academic needs and those of the general population, I won’t make a distinction.

3.1. Bandwidth and Latency

Most of the Cloud services mentioned here use network paths to/thru the commodity Internet, with the result that streaming bandwidth to these services tops out at 1-2 MB/s, some considerably less. Streaming transfers thru Academic networks (Internet2, CENIC, CalREN, etc.) can be considerably faster, especially with tuned and Grid-enabled applications (30-100MB/s, coast to coast), but few of the commercial providers have access to these networks unless requested by academic clients. When dealing with local-to-UCI machines, we can often get close to wire speeds for bandwidth (10MB/s on 100Mb Ethernet, 100MB/s on GbE). Similarly, the latency (the time for a signal to get from here to there) is much lower for local services. Hence remote services will always seem very slow compared to local services. Caching proxies and other tricks can make this somewhat easier to live with, but it is a basic characteristic of networking, especially TCP-based networking.

This is made worse by operations on many small files, since each one incurs its own setup and teardown round trips. For example, extracting the Linux kernel (626MB in ~60K files) from the distribution tarball takes about 11 sec on a local disk. The same operation via NFS to UCLA’s CASS storage resource takes about 77 min, a difference of about 400X. When it comes to network performance, local is better.
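The kernel example can be turned into a rough per-file cost. Below is a minimal Python sketch, assuming wall-clock time is a fixed overhead per file plus total size divided by a streaming rate, and taking ~55MB/s (the NFS streaming rate quoted for UCLA/CASS below) as that rate. It also ignores the decompression work included in the 11 sec local figure, so treat the result as an estimate.

```python
MB = 10**6

def estimate_time_s(n_files: int, total_bytes: float,
                    per_file_overhead_s: float, rate_bytes_per_s: float) -> float:
    """Wall-clock model: a fixed cost per file (setup/teardown round trips) plus streaming time."""
    return n_files * per_file_overhead_s + total_bytes / rate_bytes_per_s

def implied_overhead_s(observed_s: float, n_files: int, total_bytes: float,
                       rate_bytes_per_s: float) -> float:
    """Back out the per-file overhead from an observed wall-clock time."""
    return (observed_s - total_bytes / rate_bytes_per_s) / n_files

n_files, size = 60_000, 626 * MB   # Linux kernel tree from the example above
nfs_rate = 55 * MB                 # assumption: NFS streaming rate quoted for UCLA/CASS
overhead = implied_overhead_s(77 * 60, n_files, size, nfs_rate)
print(f"implied per-file overhead: {overhead * 1000:.0f} ms")
print(f"same bytes as one file: {estimate_time_s(1, size, overhead, nfs_rate):.1f} s")
```

Under this model nearly all of the 77 minutes is per-file overhead (roughly 77 ms per file), while the same 626MB as a single file would stream in about 11 seconds. The number of files, not their total size, dominates.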

Further, many Local Area Network (LAN) protocols are blocked over Wide Area Networks (WANs). For example, the UCLA/CASS storage (below) can supply UCLA’s campus with SMB/CIFS file storage as the protocol is allowed inside the campus network, but is blocked external to their network. Hence UCI’s Mac and Windows clients cannot use the UCLA/CASS storage as a fileserver. UCI would have to run a local resource to provide that service.
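To put the streaming rates from the start of this section in concrete terms, here is a back-of-the-envelope Python sketch. It estimates how long the ~100TB genomics dataset mentioned in the Introduction would take to move at the commodity-Internet and research-network rates quoted above. It uses decimal units and assumes the rate is sustained for the entire transfer, ignoring latency, per-file overhead, and retries.

```python
TB = 10**12
MB = 10**6

def transfer_days(size_bytes: float, rate_bytes_per_s: float) -> float:
    """Idealized transfer time in days: size divided by a sustained streaming rate."""
    return size_bytes / rate_bytes_per_s / 86_400

dataset = 100 * TB
for label, rate in [("commodity Internet, 2 MB/s", 2 * MB),
                    ("research network, 100 MB/s", 100 * MB)]:
    print(f"{label}: {transfer_days(dataset, rate):,.1f} days")
```

Even at the top of the commodity range the move takes about 19 months, versus under two weeks on a research network.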

3.2. Storage Costs

Raw disk costs continue their long-term characteristic of halving about every 14 mo.

This makes comparing the costs of commercial services difficult except as snapshots, or over time as normalized trends. Still, it is worthwhile to describe the current costs of raw and usable storage on a local basis. Because the commercial services sell storage in chunks of 100GB, I’ll normalize the costs of raw storage to 100GB as well, at the time of this writing (04/2015).

- The end-user cost of a NAS-quality 4TB disk is $170, or $4.25/100GB. All of this storage is available to the end user if used as a USB drive, tho with no data protection.
- A 250TB storage array chassis with 72x4TB disks is $35K (the loss from the raw capacity of 288TB is due to parity disks and hot spares). This works out to $14/100GB initially, but over a 4-year lifetime it drops to $3.5/100GB/yr.
- Currently, the cheapest commercial services for active data charge about $24/100GB/yr, or about 7x the basic cost of the storage.
- The absolute prices will decrease, but the trends continue. For example, now (12/2016) the price of the 4TB disk is < $140.
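The normalization above is simple enough to script, which makes it easy to redo as prices change. Below is a minimal Python sketch using the 04/2015 figures from the list. It counts hardware only (no power, cooling, staff, or backup copies). The trend projection assumes a clean 14-month halving, which real prices only approximate (compare the two 4TB disk prices above).

```python
def per_100gb(price_usd: float, usable_tb: float) -> float:
    """Normalize a one-time purchase price to dollars per 100GB of usable space."""
    return price_usd / (usable_tb * 10)   # 1TB = 10 x 100GB

def projected_cost(cost_now: float, months_ahead: float, halving_months: float = 14.0) -> float:
    """Trend-line projection, assuming cost halves every `halving_months` months."""
    return cost_now * 0.5 ** (months_ahead / halving_months)

disk = per_100gb(170, 4)            # single NAS disk, no redundancy
array = per_100gb(35_000, 250)      # 72x4TB chassis, 250TB usable
array_per_year = array / 4          # amortized over the 4-year lifetime
commercial = 24.0                   # cheapest commercial active-data tier, $/100GB/yr

print(f"disk ${disk:.2f}/100GB, array ${array:.2f}/100GB = ${array_per_year:.2f}/100GB/yr")
print(f"commercial / array: {commercial / array_per_year:.1f}x")
print(f"array cost in 14 months on trend: ${projected_cost(array, 14):.2f}/100GB")
```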

4. Requirements for File Services

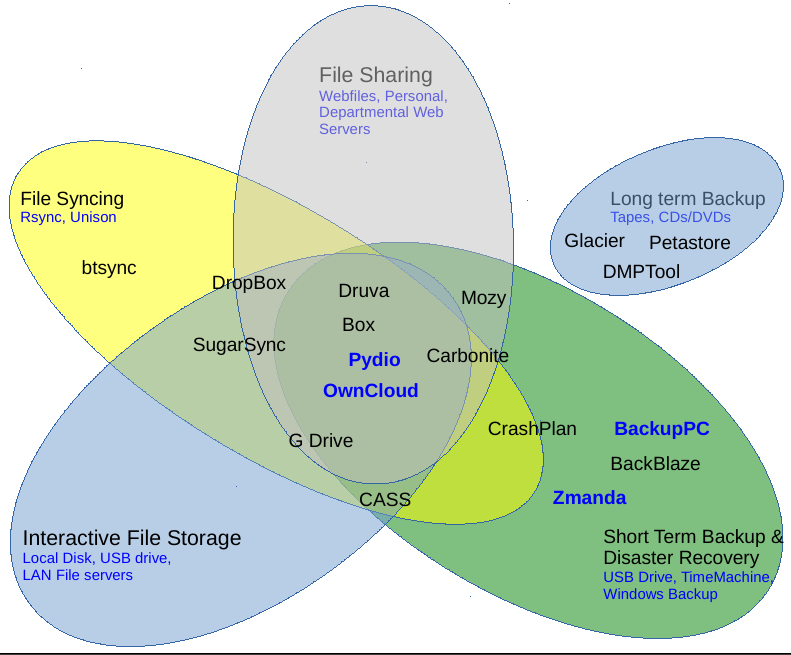

There are 5 rough and overlapping areas of storage functionality that I’ll address.

4.1. Interactive file storage

This file storage is most often associated with LAN-based file servers, where a Windows, Mac, or Linux client remote-mounts an SMB/CIFS or NFS filesystem and interacts with it as if it were a local filesystem. Current cloud services such as Google Drive or DropBox emulate this functionality when using their native clients, with the added ability to sync and/or share those files. This kind of interaction is particularly sensitive to network latency when the work involves moving large chunks of data, such as nonlinear editing of multimedia files, slicing and dicing of satellite data, and querying relational databases.

It should be noted that these cloud services are almost always object file systems, which expose only whole-object operations over HTTP (chiefly PUT, GET, and HEAD). As such, when you ask for a file, you get the whole file, and you have to write the whole file back: no in-place partial writes, no appends, no striding to offsets (some services do allow partial reads via HTTP range requests). POSIX filesystems allow much finer-grained file manipulation, and many applications, especially in the research domain, require such file access. Most native clients for such cloud services read the whole file, cache it locally on the client’s POSIX FS, allow it to be manipulated with POSIX semantics, and then, when finished, write it back to the cloud in toto, sometimes with some server-side magic that emulates an rsync operation.
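Below is a minimal Python sketch of the difference for a 4-byte change in the middle of a 10MB file. The POSIX side uses ordinary `seek`/`write`. The object side uses a hypothetical in-memory `ToyObjectStore` that models only whole-object PUT and GET and counts the bytes that would cross the network. It is not any real service’s API and ignores range reads.

```python
import os
import tempfile

def posix_patch(path: str, offset: int, data: bytes) -> None:
    """POSIX-style update: seek to an offset and overwrite bytes in place."""
    with open(path, "r+b") as f:
        f.seek(offset)
        f.write(data)

class ToyObjectStore:
    """Hypothetical in-memory stand-in for whole-object PUT/GET; not any vendor's API."""
    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}
        self.bytes_transferred = 0

    def put(self, key: str, body: bytes) -> None:
        self._objects[key] = bytes(body)
        self.bytes_transferred += len(body)

    def get(self, key: str) -> bytes:
        body = self._objects[key]
        self.bytes_transferred += len(body)
        return body

def object_patch(store: ToyObjectStore, key: str, offset: int, data: bytes) -> None:
    """Object-style update: download the whole object, edit locally, upload it all again."""
    body = bytearray(store.get(key))
    body[offset:offset + len(data)] = data
    store.put(key, bytes(body))

payload = b"A" * 10_000_000                      # a 10MB file
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(payload)
posix_patch(tmp.name, 5_000_000, b"BBBB")        # touches 4 bytes on disk
with open(tmp.name, "rb") as f:
    f.seek(5_000_000)
    patched_on_disk = f.read(4)
os.remove(tmp.name)

store = ToyObjectStore()
store.put("data.bin", payload)
store.bytes_transferred = 0                      # count only the edit
object_patch(store, "data.bin", 5_000_000, b"BBBB")
print(patched_on_disk, store.bytes_transferred)  # b'BBBB' 20000000
```

The 4-byte edit costs 20MB of traffic on the object side (10MB down, 10MB up), which is why native clients cache whole files locally.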

Object FSs are often used as buckets for large chunks of infrequently accessed data because they are presented as simpler, cheaper, and more robust than POSIX FSs. That can be true, but there is a base cost of storage, and the savings are often overwhelmed by the cost of the rest of the system.

4.2. File Sharing

This functionality is chiefly for collaboration, where one party wants to share files with others. DropBox is a front-runner here, chiefly for the ease of its interface. UCI has dabbled with this kind of service by providing the commercial Webfiles, which offers a limited amount of storage via a creaky, cranky interface. Users can share files via the native application in most cases, or on the web via URLs to the files (like DropBox, Google Drive, Box). Problems with both commercial and local implementations include limitations on space and opaque interfaces that make it difficult to tell which users or groups can do what with which files.

4.3. File Syncing

This area is somewhat fuzzy. It usually means that changes in one copy of a file are propagated to all other copies, with only the bits that have changed moving across the network. However, the changes may be propagated in real time (as is critical when co-editing a document) or delayed until a timed service activates (as when a backup job starts). Different services interpret this to mean different things. BitTorrent/Resilio Sync, DropBox, and others support variants of this approach, tho there’s considerable overlap with the apps in the next section.
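The "only the changed bits move" idea can be illustrated with block hashing. Below is a minimal Python sketch, assuming fixed-size blocks compared at the same offsets: each side hashes its copy, and only blocks whose hashes differ need to be sent. This is a simplification. rsync, for example, adds a rolling checksum so that inserting bytes (which shifts every later block) does not force the rest of the file to be resent.

```python
import hashlib

BLOCK = 4096   # assumption: block size chosen for illustration

def block_hashes(data: bytes, block: int = BLOCK) -> list[str]:
    """SHA-256 digest of each fixed-size block of `data`."""
    return [hashlib.sha256(data[i:i + block]).hexdigest()
            for i in range(0, len(data), block)]

def changed_blocks(old: bytes, new: bytes, block: int = BLOCK) -> list[int]:
    """Indices of blocks in `new` that differ from the block at the same offset in `old`."""
    old_h, new_h = block_hashes(old, block), block_hashes(new, block)
    return [i for i, h in enumerate(new_h) if i >= len(old_h) or old_h[i] != h]

old = bytes(1_000_000)                   # 1MB of zeros, identical on both sides initially
new = bytearray(old)
new[500_000:500_010] = b"x" * 10         # a 10-byte edit in the middle
delta = changed_blocks(old, bytes(new))
print(delta)                             # [122]: one 4KB block to send instead of 1MB
```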

4.4. Short Term Backup

This area covers short term backup such as incremental backups or snapshots (like Apple’s Time Machine) and personal disaster recovery. This often includes syncing files across devices and easy client-side-initiated recovery. Examples of this kind of service are the Open Source Software (OSS) packages BackupPC and Zmanda, and the commercial services CrashPlan and BackBlaze.
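Incremental backup tools have to decide which files changed since the last run. Below is a minimal Python sketch of one common heuristic: compare each file’s size and modification time against a manifest saved by the previous run. This is the same "quick check" rsync uses by default. The function names are hypothetical and are not taken from BackupPC, Zmanda, or any commercial client.

```python
import os
import tempfile
from pathlib import Path

def snapshot_manifest(root: str) -> dict[str, tuple[int, int]]:
    """Map each file's path (relative to root) to its (size, mtime in ns)."""
    manifest = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            p = Path(dirpath, name)
            st = p.stat()
            manifest[str(p.relative_to(root))] = (st.st_size, st.st_mtime_ns)
    return manifest

def incremental_set(previous: dict, current: dict) -> tuple[list[str], list[str]]:
    """Return (files to copy because they are new or changed, files deleted since last run)."""
    to_copy = sorted(k for k, v in current.items() if previous.get(k) != v)
    deleted = sorted(k for k in previous if k not in current)
    return to_copy, deleted

with tempfile.TemporaryDirectory() as root:
    Path(root, "a.txt").write_text("alpha")
    Path(root, "b.txt").write_text("beta")
    before = snapshot_manifest(root)                 # manifest saved by the "last backup"
    Path(root, "b.txt").write_text("beta, edited")   # size changes, so it is flagged
    Path(root, "c.txt").write_text("gamma")
    Path(root, "a.txt").unlink()
    to_copy, deleted = incremental_set(before, snapshot_manifest(root))

print(to_copy, deleted)   # ['b.txt', 'c.txt'] ['a.txt']
```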

4.5. Long Term Backup

This addresses long-term file archiving. Files go in and stay in until they are needed for disaster recovery; services include Amazon Glacier, BackBlaze, and CrashPlan. The cost of these types of plans is often fairly low, but with the expectation (often codified in the Service Level Agreement (SLA)) that there will be little file retrieval without incurring additional costs. In the case of Amazon Glacier especially, there are few client-side applications that interact with the service directly, partly because Amazon provides no end-user interface to it. You either go thru Amazon’s S3 storage system or call Glacier’s own API, so it is essentially an API that you have to write code to support. Some applications provide Graphical User Interfaces to it, typically as part of S3-specific File Transfer Managers like CyberDuck, Dragondisk, and the like. It is unlikely that any self-hosted system could match the cost of such commercial long-term archival services.

Wikipedia has a good Comparison of Cloud Backup Services in terms of features. Note that Glacier is not mentioned since it is an API, not a client-side service currently.

There is considerable overlap among these services - for example, Google Drive allows syncing, and file sharing as well as file storage and co-editing of documents, as does Microsoft’s SharePoint, which aims to be the file-everything for all things Microsoft. Druva is another that claims to be all-devices Enterprise-ready for sharing, syncing, & backup.

The Venn diagram at the top of this section shows these areas and how some of the services fit into them. Small blue text indicates how users might have performed such functions in the past. Large blue text indicates Open Source packages or services that can be run locally. (LibreOffice diagram file here)

5. Reputation and Failure History

There have been cloud failures among all the major service providers, including Google’s 2013 Gmail failure, the widespread 2011 Amazon EC2 failures, Microsoft’s semi-continuous struggle to be able to scale their technology beyond the desktop, and the odd CrashPlan Crash.

Most of these were able to recover within hours, but in some cases, the outages extended into multiple days and in one spectacular case, Microsoft essentially let a large acquisition go out of business due to their inability to recover from a massive backup failure.

So whatever the hosting service, there will almost certainly be data losses. You picks your horses, you takes your chances. If your data doesn’t live in at least 2 (preferably 3) physically distinct places, it’s not safe.
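The reasoning behind "at least 2 (preferably 3) physically distinct places" can be expressed as numbers. Below is a minimal Python sketch, assuming each copy has the same chance of being lost in a given period and that losses are independent. The 1% figure is purely hypothetical. Physical separation matters because copies in the same building, or with the same provider, tend to fail together, which breaks the independence assumption.

```python
def p_all_copies_lost(p_single: float, copies: int) -> float:
    """Chance that every copy is lost in the same period, assuming independent failures."""
    return p_single ** copies

P_LOSS = 0.01   # hypothetical: 1% chance a given copy is lost in a year
for n in (1, 2, 3):
    print(f"{n} copies: {p_all_copies_lost(P_LOSS, n):.0e}")
```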

6. Local or Commercial Services

There are strong advantages to both and it will require a close examination of what each offers and perhaps even a local survey of users to determine which is more appropriate. This can roughly be broken down into the pros and cons for Administrators and Users, who often have conflicting requirements.

6.1. Local Installation

6.1.1. Administrators

For administrators, there are a lot of costs involved in implementing a local service.

Pros

- May be much cheaper in the long term, depending on uptake, by using vanilla hardware and OSS systems, including already existing OSS cloud systems (OwnCloud, OpenStack).
- OSS systems prevent vendor lock-in.
- Can use scalable storage (BeeGFS, GPFS), so capacity can grow as we need it, much like an HPC storage system, with rsync to another on-campus system or geo-replication to a peer system in the UC system.
- Snapshot / Time Machine functionality using ZFS, LVM, or other technologies.
- The freedom to bill by usage, and to bill individuals, Departments, Schools, etc.
- We can implement the kind of security we want and the restrictions we need regarding HIPAA, FERPA, FISMA, and other restricted data, and can provide encryption, LDAP authentication, etc.
- Local control may reduce legal costs by keeping everything under UC control.
- Very attractive to funding agencies and for meeting archiving requirements.
- Very useful for data collaboration if the local installation can immediately provide web access to arbitrary files on the server side.

Cons

- Large installations are always non-trivial and expensive, even using OSS systems. Initial configuration, tuning, and edge cases are always problems (but locality allows local changes as needed without long wait times and/or lawsuits).
- Do local personnel have the requisite expertise and understanding of large systems, and the budget to support them to the correct level?
- If not, more FTEs would be required to support such a system: probably 1 to start, more depending on uptake and the services supported thru it.
- Extra effort and expense would have to go into reliability, failover, and High Availability. This would be a mission-critical system, so doing it on the cheap would not be advised.
- While we could buy good hardware cheaply, we still could not buy it as cheaply as Google (but Google then upcharges for access, altho it currently provides essentially infinite, but slow, storage to UCI users).
- Would require separate, additional services for short- and long-term backup, as opposed to file sharing options.
- Underlying storage would have to grow at ~1-5 TB/day for a university-wide service.
- An administrative recharge system would have to be developed, if not already part of the infrastructure.
- Commercial services might be able to offset their costs with advertising and by reselling customer information, options that would not be available to us.
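The ~1-5 TB/day growth figure translates into hardware quickly. Below is a rough Python sketch using the 250TB/$35K array from the Storage Costs section. It deliberately ignores replicas and backups (which would at least double the raw need), falling disk prices, power, and staff, so treat the output as an order-of-magnitude estimate rather than a budget.

```python
USABLE_TB_PER_CHASSIS = 250   # the 72x4TB array from the Storage Costs section
COST_PER_CHASSIS = 35_000     # USD, 04/2015 price from the same section

def yearly_hardware(tb_per_day: float) -> tuple[float, float, float]:
    """TB added per year, chassis needed, and hardware dollars, at a constant growth rate."""
    tb_per_year = tb_per_day * 365
    chassis = tb_per_year / USABLE_TB_PER_CHASSIS
    return tb_per_year, chassis, chassis * COST_PER_CHASSIS

for rate in (1, 5):           # the ~1-5 TB/day range above
    tb, chassis, dollars = yearly_hardware(rate)
    print(f"{rate} TB/day: {tb:,.0f} TB/yr, {chassis:.1f} chassis, ~${dollars:,.0f}/yr")
```

That is roughly 1.5 to 7.3 chassis, or about $51K to $256K of array hardware per year at 04/2015 prices, before any redundancy.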

6.1.2. Users

Pros:

- Choice of protocol support: since it’s local, files can be made available via NFS, CIFS, Globus, http, ftp, rsync, etc.
- MUCH higher bandwidth to the storage, by 10-100 fold. Local clients could probably get close to full GbE wire speeds, high enough to do things like video editing directly from the service.
- Recharge directly to grants or other administrative funds, so users wouldn’t have to go thru 3rd parties.
- Local control means local modifications. With user feedback, we could change rules, quotas, groups, etc. to make it much more usable much more quickly than commercial services could.
- Local humans on the other end of a support call.
- Local installations prevent widespread harvesting and resale of personal information by cloud vendors.

Cons:

- Not many cons.
- The University might have to charge higher prices initially to cover extra FTEs and hardware.

6.2. Commercial Systems

6.2.1. Administrators

Pros

- Cheap in the short term, but possibly expensive in the long term in aggregate.
- However, the Admin can offload legal exposure and liability to the service provider.
- Little HR liability.
- No Data Center and power requirements.
- Can offload most expense directly onto clients.
- Typically very reliable (except that all major providers have suffered fairly large outages, and some have been catastrophic).

Cons

- May require networking upgrades to allow better connectivity.
- Wider perception that inability to provide such services indicates an overall lack of ability. (What can OIT do, besides push the outsource button?)
- Little control over types of files, legality, exposure, administration, etc.
- Almost no enforceable legal recourse.

6.2.2. Users

Pros

- Can start using a well-debugged system immediately.
- Much cheaper in the short term than a system they could put together themselves.
- In general, the best systems work quite well, altho they may be very expensive for large-scale data sharing.
- Application support is much better than with a local installation.

Cons

- No human on the other end for technical support.
- Limits on file sizes may inhibit utility.
- Total storage costs may become very high for sharing large amounts of data.
- Flex/spike usage is impossible: you tend to have to buy into the next highest tier.

7. Local Sharing Services

There is a fairly long personal evaluation of some of the following self-hosted cloud file services posted recently (Sept 22, 2014) here. It includes links to other evaluations and even a long podcast or two. The upshot for now is that while some of these services would probably be a good fit for a research group or even department, none of them are stable enough or scale well enough for a UC campus.

7.1. OwnCloud

The most popular local sharing service is OwnCloud, which is available as source code, as a Bitnami installation package, and as an Amazon service. It has Windows, Mac, and Linux desktop clients, as well as iOS and Android clients. It uses Web Distributed Authoring and Versioning (aka WebDAV) for most of its file transport, allowing connectionless access to files. This is a fairly low-performance protocol, but since the service operates at near-LAN speeds, it can still be quite useful. Both network traffic and storage are encrypted. The file-level encryption is useful if you then share files outside of OwnCloud: the files stay encrypted until the reader provides the decryption key.

It also allows you to add storage from various cloud services (Dropbox, SWIFT, FTPs, Google Docs, S3), tho that seems a strange attraction for a service whose main feature is that it’s locally based.

OwnCloud is old enough that it has developed apps that make it possible to co-author (in RichText) documents with up to 5 authors, much like Google Docs.

See also a recent trade rag article (free reg req) and a Linux-oriented podcast that spends a good amount of time on it (starting ~1m:36s into the podcast).
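Because OwnCloud speaks WebDAV, any WebDAV client, or a few lines of code, can talk to it. Below is a minimal Python sketch that builds, but does not send, a PROPFIND request listing one directory level, as defined in the WebDAV specification (RFC 4918). The host name and credentials are hypothetical. The `/remote.php/webdav/` path is the one classic OwnCloud installations used, so check your own server’s documentation.

```python
import base64
import urllib.request

# Hypothetical server; the path is the classic OwnCloud WebDAV endpoint (verify locally).
BASE_URL = "https://owncloud.example.edu/remote.php/webdav/"

PROPFIND_BODY = b"""<?xml version="1.0" encoding="utf-8"?>
<d:propfind xmlns:d="DAV:">
  <d:prop><d:getcontentlength/><d:getlastmodified/></d:prop>
</d:propfind>"""

def propfind_request(path: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a WebDAV PROPFIND listing one directory level."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        BASE_URL + path,
        data=PROPFIND_BODY,
        method="PROPFIND",
        headers={
            "Depth": "1",                     # the collection plus its direct children
            "Content-Type": "application/xml; charset=utf-8",
            "Authorization": f"Basic {token}",
        },
    )

req = propfind_request("projects/", "alice", "not-a-real-password")
print(req.get_method(), req.full_url, req.get_header("Depth"))
# Sending it with urllib.request.urlopen(req) should yield a 207 Multi-Status XML response.
```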

7.2. Pydio

Pydio is very similar to OwnCloud, with an arguably better user interface and reportedly better performance on large numbers of files. However, it is younger than OwnCloud, and so has fewer installations and fewer up-to-date reviews. We will be testing it alongside our ongoing OwnCloud tests.

7.3. UCLA / CASS

UCLA has developed a large campus storage system that provides local interactive file storage via NFS and SMB/CIFS, as well as a Globus endpoint and a CrashPlan server (see below) that requires extra-cost licenses. They require a minimum purchase of 1TB (large relative to other services) for $171/yr, which is cheap for the space ($17/100GB/yr), but it’s a lot of space. UCLA is networkologically close to UCI, and we can attain very fast streaming transfers (up to ~90MB/s via Globus Connect, ~55MB/s via NFS). However, while Globus can be used by all clients, NFS is generally used only by Linux/Unix clients. The preferred protocol for Mac and Windows clients (SMB/CIFS) is blocked across most Wide Area Networks, so UCI clients cannot currently use UCLA’s storage for interactively used files. Additionally, while streaming large files works well, manipulating large numbers of small files is very slow due to per-file and IO overhead.

8. Local Backup services

I have written about using local backup services at length here. It’s 5 years old, but all of the services are still available (unlike many commercial products that old) and the rationale is still valid.

9. Commercial Long Term Backup Services

9.1. Amazon Glacier

As noted above, Glacier is not currently a client-facing service; it was developed as the backup system for Amazon’s S3 storage system and is therefore usually accessed programmatically from within EC2. More recently, external developers have provided Hierarchical Storage Management (HSM) software that can push data directly to Glacier.

Glacier is designed with the expectation that retrievals are infrequent, that data will go into it and stay there. You can retrieve up to 5% of your average monthly storage for free each month. More on the fee structure here.

The cost of Glacier storage is almost infinitesimal for individual docs: for a 100GB home dir, only about $1 a month. However, altho transfers to other AWS services in the same region are free, retrieval costs are pro-rated and complex, and while they can be reduced by spreading retrievals over time, they will generally be on the order of $10s, not $100s. Compared to the loss of 100GB of personal data, this is dirt cheap, if partially offset by the difficulty and time needed to retrieve it.
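Below is a minimal Python sketch of the two numbers that matter most when sizing Glacier: the cost of keeping data there, and how much can come back each month before retrieval fees apply. The per-GB price is an assumption chosen to match the ~$1 per 100GB above. The free allowance is simplified to a flat 5% of stored data per month. Amazon’s actual pricing and retrieval rules are more complex and change over time.

```python
def glacier_month(stored_gb: float, price_per_gb_month: float = 0.01,
                  free_fraction: float = 0.05) -> tuple[float, float]:
    """Monthly storage bill (USD) and free retrieval allowance (GB).

    price_per_gb_month is an assumption consistent with the ~$1 per 100GB quoted above;
    free_fraction models the 5%-of-monthly-storage allowance, simplified to a flat amount.
    """
    return stored_gb * price_per_gb_month, stored_gb * free_fraction

for gb in (100, 1_000):       # a home dir and a hypothetical 1TB lab archive
    bill, free_gb = glacier_month(gb)
    print(f"{gb} GB: ${bill:.2f}/month to store, ~{free_gb:.0f} GB/month free to retrieve")
```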

9.1.1. Glacier Clients

While Amazon has not released any Glacier clients, others have used the API to create a few user-oriented clients, including:

- Cyberduck, which has an Amazon S3 client that can push data into the Glacier staging area.
- FastGlacier (Windows only).
- GlacierUploader, a multi-platform client.
- A variety of other, mostly single-author, aging clients.

All the clients besides FastGlacier and CyberDuck are single-developer projects and are not well supported.

While Glacier is very cheap, it is not trivial to access and use, even with Step-by-Step Instructions. Therefore, its use may be beyond the ability (and certainly the patience) of most faculty.

10. Commercial Short Term Backup Services

These services are mostly for short term backups and many of them have explicit language in the SLAs that if you do not continuously use their service, they will delete your data after a few months of inactivity.

10.1. Backblaze

Backblaze has an interesting business model: they build their own infrastructure and are remarkably open about how they carry out their business. They also bill on a statistical model that allows unlimited backup for $5/mo (currently), and while they support only Macs and Windows, their client is very easy to configure and use, with much clearer documentation than CrashPlan’s. The client is very snappy and does not give misleading estimates of time to complete, just what’s been done. BackBlaze also allows you to back up local USB disks and (for extra $) will ship your files back to you on a disk.

10.2. CrashPlan

The CrashPlan combined client/server is writ in Java and runs smoothly on all platforms. It’s easy to install, tho it’s impossible to select the free 30-day trial directly; as with many such free trials, you have to 1st buy the month-by-month plan.

The GUI is somewhat confusing: when you select Backup, it doesn’t execute immediately but waits for idle time, which is configurable via settings but not clearly explained. The backup to the CrashPlan cloud took ~3hr for 14K files (9.1GB). CrashPlan tries to estimate how long a backup will take, but since that depends on many variables, the estimate is almost a random guess.

A notable point about the CrashPlan system is that the free client is also a server. You can install the same software on your own server and back up multiple clients to it simply by providing the invitation key, using the same interface that the commercial service provides. This allows you to use a local server, speeding up backups 10-20 fold. This in itself is a very useful feature for a small to medium-sized research group.

10.2.1. CrashPlan UCLA

UCLA is offering a CrashPlan client that backs up to its campus storage system. You have to rent the storage space for $170/TB/yr, plus an extra $36 for the CrashPlan license, for a total of $206/TB/yr (or ~$21/100GB/yr). That is still very cheap, comparable to Google Drive, but this service offers only CrashPlan and few additional features, unlike Google Drive.

10.3. Mozy

Mozy (now owned by EMC) provides a tiny free account, hoping that you upgrade to a more expensive one. A basic personal plan costs $100/100GB/yr. It has a pretty good interface (Mac, Win, iOS, Android) for de/selecting files and monitoring backups in progress. Like a few others, Mozy lets you use the same interface to back up to local USB disks.

11. Commercial File Sharing Systems

11.1. DropBox

DropBox puts the emphasis on sharing rather than backups. It is possibly the best known service, with the widest multi-platform client support, and is in widespread use at UCI and elsewhere due to both its business plan and the ease of use and integration of its clients. It has also gone further than most in integrating with other large cloud platforms such as Facebook and Twitter.

11.2. SugarSync

SugarSync is also very easy to use, with the emphasis on syncing rather than backups. It has no restrictions on directory structure (not necessary to put all the files you want to share in one dir) which helps to differentiate it from most other services. It has no maximum file size which is good for sharing large data files, but is fairly expensive ($100/yr/100GB/person). No Linux support.

11.3. Google Drive

Google Drive provides Windows and Mac clients, but only limited Linux utility via 2nd sources. The native clients integrate fairly well with the native filesystem and allow you to use Drive as additional storage if the files are small. If you use Gmail, you can search across mail and uploaded files. Drive is also available on Chromebooks and includes some apps, such as spreadsheets and word processing. Storage costs $24/100GB/yr.

The Linux clients are commandline only: grive (a GitHub project last updated in 2013) and drive. The actual bandwidth to Google is extremely Internet-dependent. On the day I was using it, uploads ran at ~160-500KB/s; downloads were much faster, but only up to about 1-2MB/s.

It’s possible to attach Google Drive to large Linux systems as described [here], but bandwidth is still quite slow, about 3-5MB/s for large files.

11.4. Druva

Druva is much more business-oriented than most such services, with emphasis on encryption, multiple users, reliability, support for business-oriented devices, and speed. It uses in-line deduplication to speed backups and reduce their size. These features would have limited advantages for research data.

11.5. Amazon Cloud Drive

Amazon Cloud Drive is the consumer version of their S3 Cloud storage with various add-ons like auto-ripping CDs that you’ve bought from them (~$50/100GB/yr). And Amazon Zocalo is the business version ($30/100GB/yr).

11.6. Apple iCloud

iCloud is designed primarily for buyers of Apple’s iDevices, so its offerings are less price-sensitive than others and emphasize integration with Apple iProducts and social media sharing. Its current pricing is ~$60/100GB/yr, about 2.5X Google’s cost.

11.7. Box

Box is more business oriented, with plans that include multiple-user access ($60/100GB/yr w/ 3-10 users, with a 2GB maximum file size). Regardless of plan, the maximum file size is no more than 5GB.

11.8. Copy.com

Copy.com provides MacWinLin clients and sharing among multiple users, with the cost starting at a normalized ~$40/100GB/yr.

11.9. OneDrive (née SkyDrive)

OneDrive is Microsoft’s attempt at taking on the Cloud space. It is competitive with the cheapest other cloud systems (at $24/100GB/yr) and if you buy 1TB of space for a year, you get the dubious pleasure of Office 365 for free. OneDrive offers Windows clients of course as well as Mac, iOS, and Android support, but despite Microsoft’s recently professed love for Linux, support for the Linux Cancer is missing.
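Pulling together the normalized prices quoted throughout this document makes the spread easier to see. Below is a minimal Python sketch using those figures in $/100GB/yr at roughly 2015 pricing. The "~" values are taken at face value, and the UCLA figures are converted from $/TB/yr. The local figure is hardware only, so it understates the true cost of a local service.

```python
LOCAL = "Local 250TB array (hardware only)"

PRICES = {                       # $/100GB/yr as quoted in this document
    LOCAL: 3.5,
    "UCLA/CASS storage": 17.1,
    "UCLA CrashPlan (storage + license)": 20.6,
    "Google Drive": 24,
    "OneDrive": 24,
    "Amazon Zocalo": 30,
    "Copy.com": 40,
    "Amazon Cloud Drive": 50,
    "Box": 60,
    "Apple iCloud": 60,
    "Mozy": 100,
    "SugarSync": 100,
}

def relative_to(baseline: str, prices: dict[str, float]) -> list[tuple[str, float, float]]:
    """(service, price, multiple of the baseline price), cheapest first."""
    base = prices[baseline]
    return sorted(((name, p, p / base) for name, p in prices.items()), key=lambda r: r[1])

rows = relative_to(LOCAL, PRICES)
for name, price, ratio in rows:
    print(f"{name:36s} ${price:6.2f}/100GB/yr  {ratio:5.1f}x")
```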